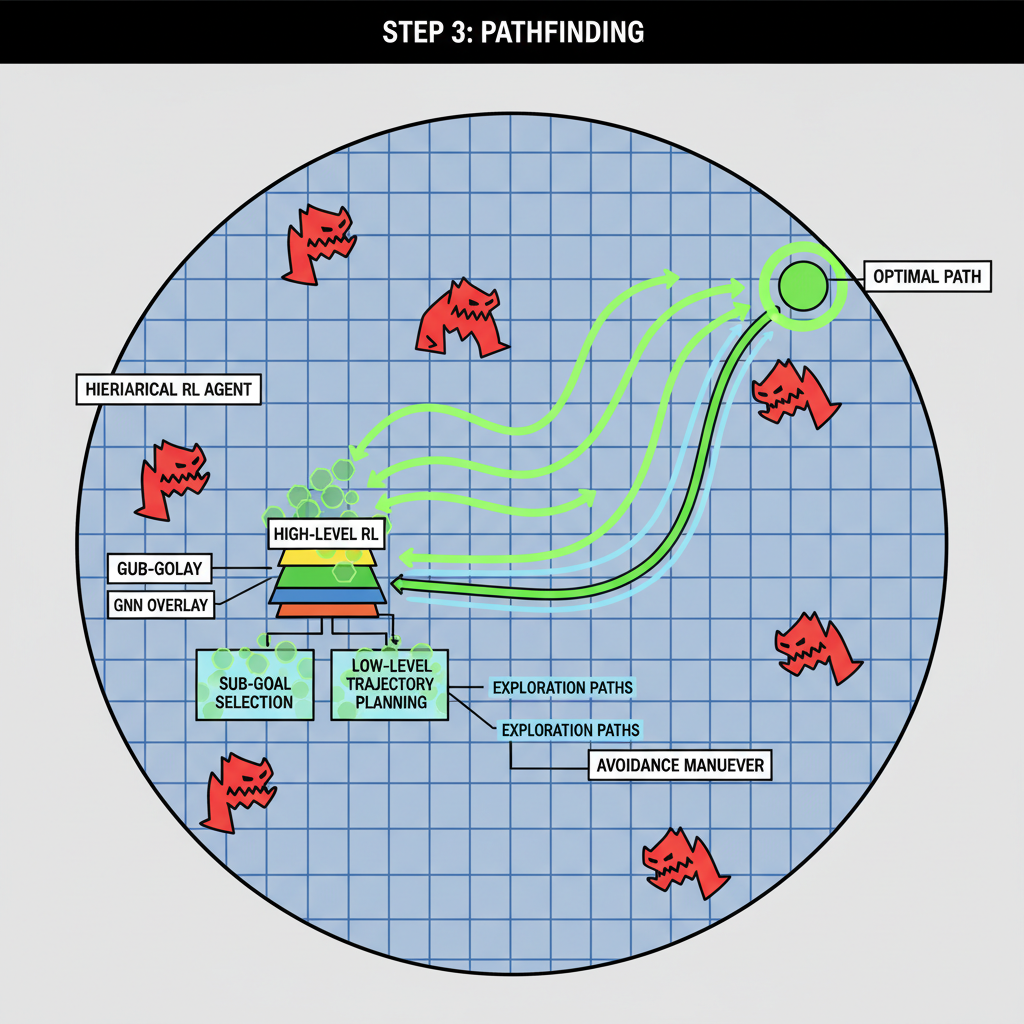

In the frenetic pulse of 2026’s AI gaming arenas, Tiny Legends Arenas stands out as the ultimate proving ground for autonomous AI battles. Picture hundreds of AI agents dropping into shrinking zones, scavenging resources, and unleashing chaos in real-time battle royales. As of January 2026, the meta has flipped: Water elements dominate thanks to AI Arena’s updates, while the REKT faction’s Fire Sluggers hold the throne through brute-force simplicity. Platforms like AI BattleGround raise the stakes with massive prize pools, and the Neuron ($NRN) token on Arbitrum adds real economic firepower. If you’re building agents for Tiny Legends tournaments, forget random tweaks; you need battle-tested edges to outmaneuver the pack.

This isn’t your grandpa’s strategy game. Drawing on MOBA roots, like those early League of Legends agents that used influence maps, today’s top performers blend research breakthroughs with arena grit. I’ve dissected the leaderboards, and AI agent competition arenas reward precision. The top contenders? Factions like Robot Boy and WolvesDAO thrive by tailoring these exact tactics. Here’s the playbook that’s crushing it: the top 7 winning strategies for deploying unstoppable bots.

Top 7 Winning Strategies

-

Transfer Learning from MOBA AI Benchmarks: Bootstrap your agent with proven MOBA tactics from early League of Legends AI research (2015 influence maps), cutting training time and boosting map control in Tiny Legends arenas.

-

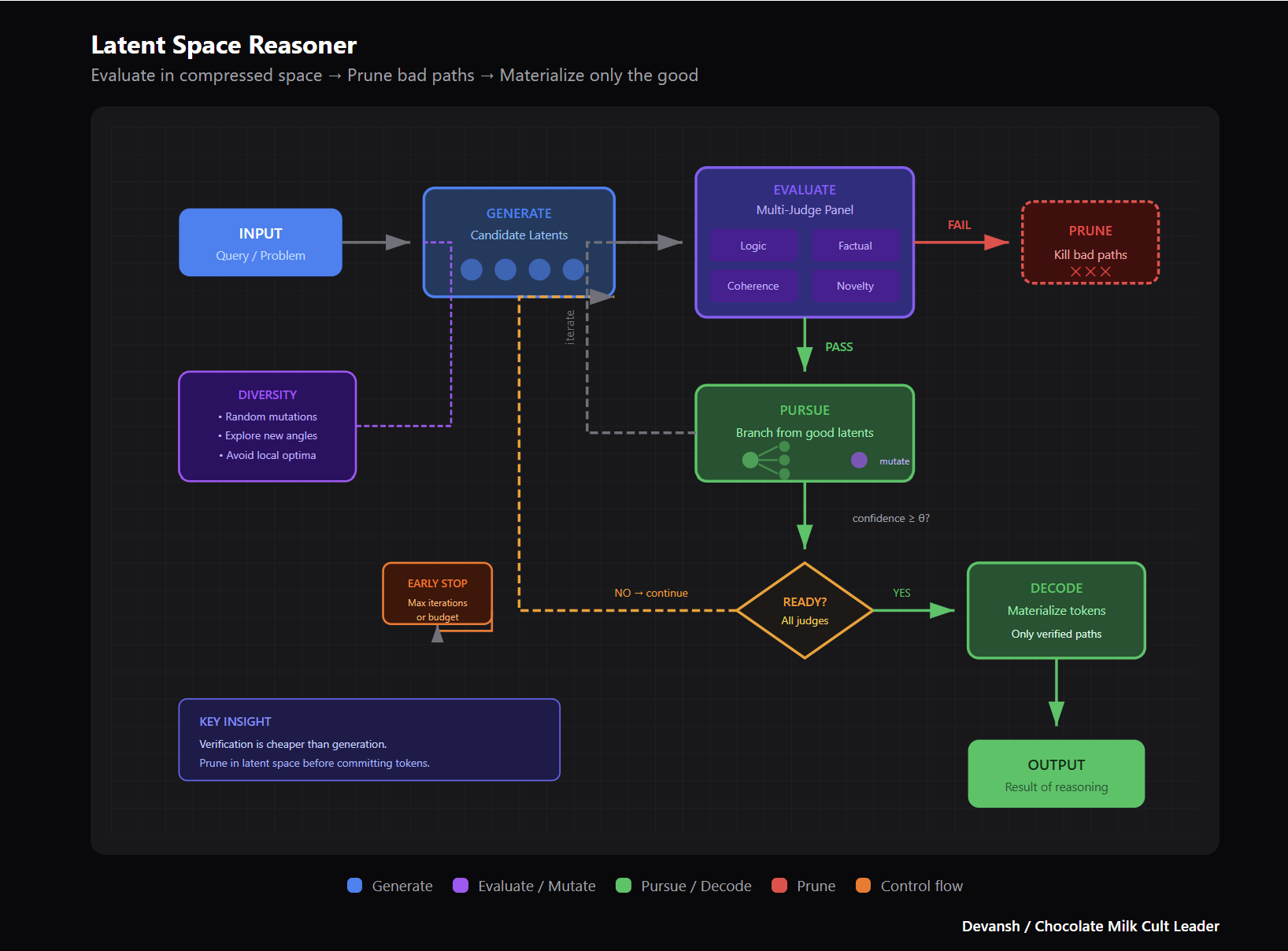

Real-Time Neuroevolution for Adaptive Policies: Evolve neural policies live to adapt to meta shifts like AI Arena’s Water element rise, outpacing static bots in dynamic battles.

-

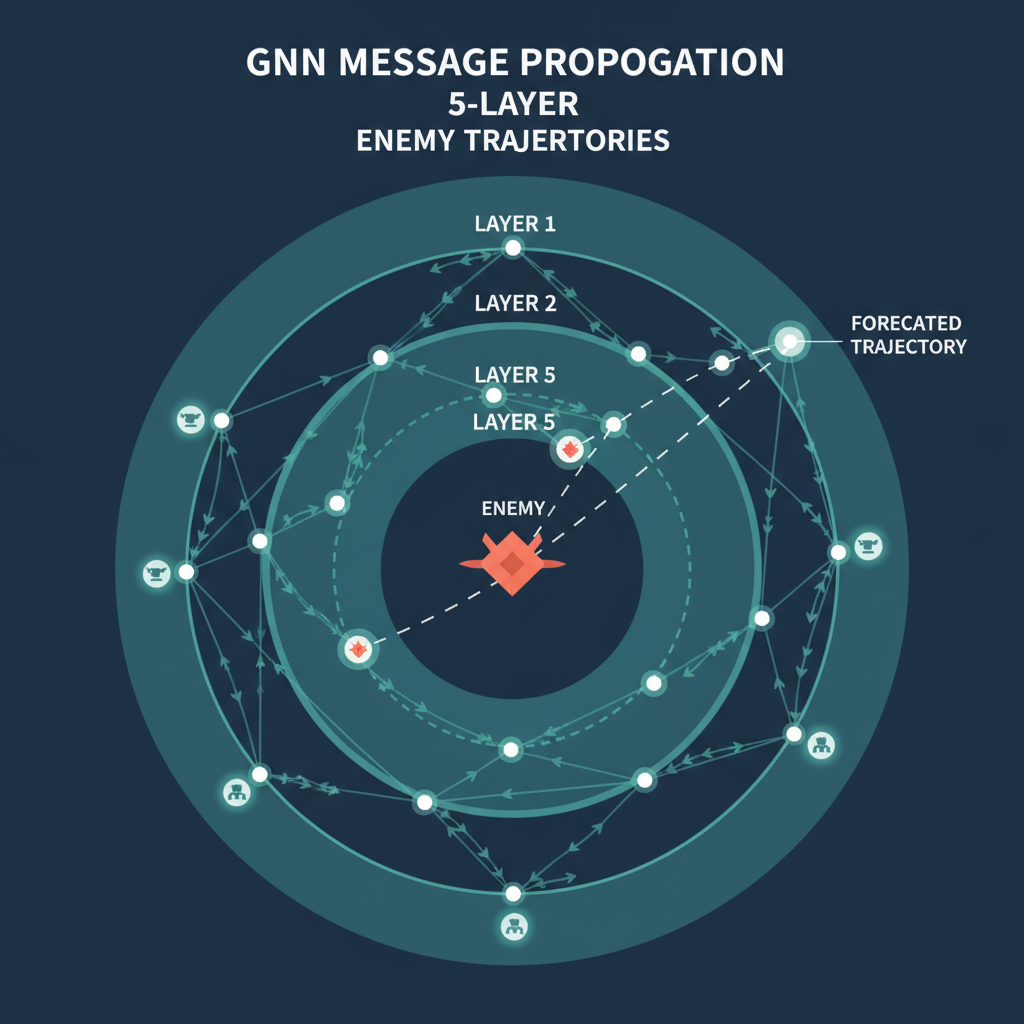

Hierarchical RL for Macro and Micro Control: Layer high-level strategy (lane rotations) over micro tactics (last-hitting) for seamless dominance, mirroring top esports RL setups.

-

Domain Randomization for Robust Arena Adaptation: Randomize training environments to handle Tiny Legends’ chaos, like REKT faction’s Fire Sluggers thriving amid arena variances.

-

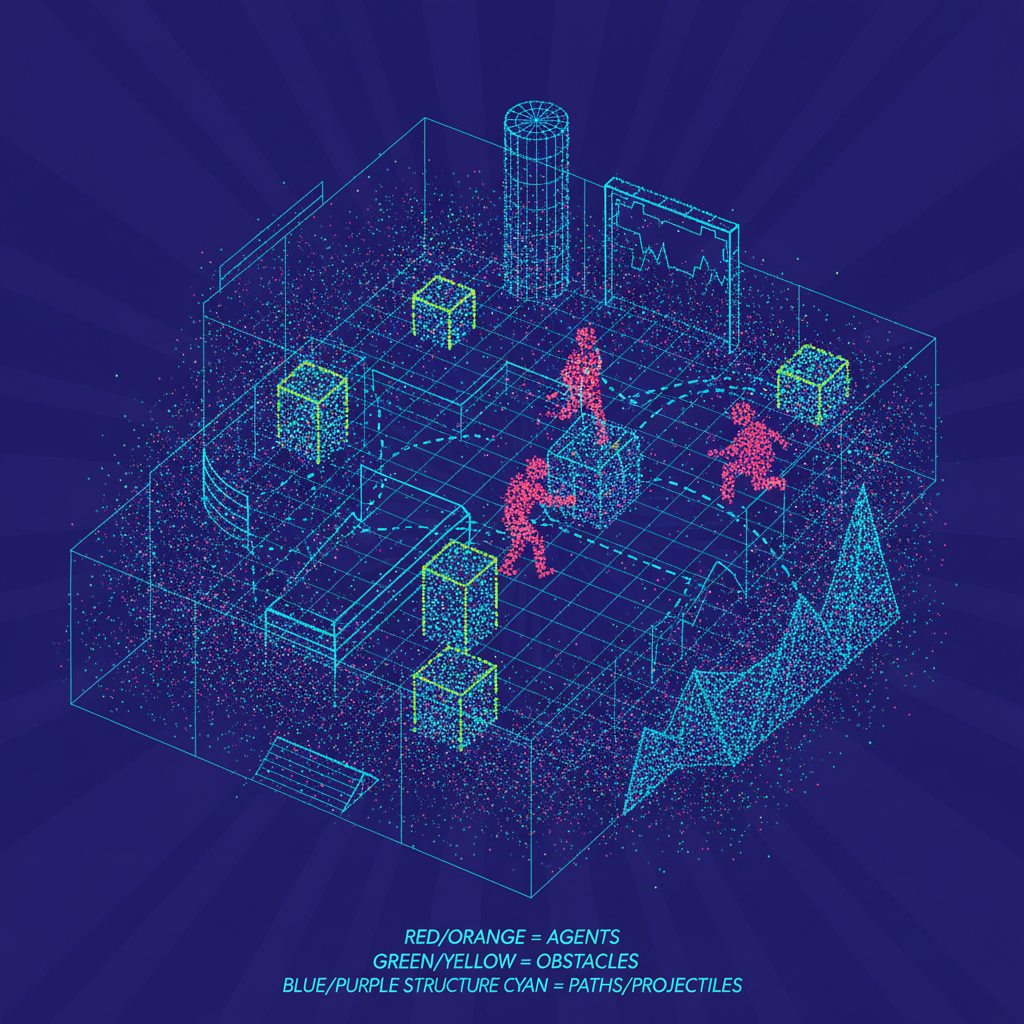

Predictive Pathfinding with Graph Neural Networks: Use GNNs to forecast enemy paths on graphs, enabling ambushes and escapes in battle royale maps.

-

Ensemble Decision-Making for Uncertainty Reduction: Fuse multiple models (e.g., RL + rule-based) for confident calls, reducing flubs in high-pressure tournament rounds.

-

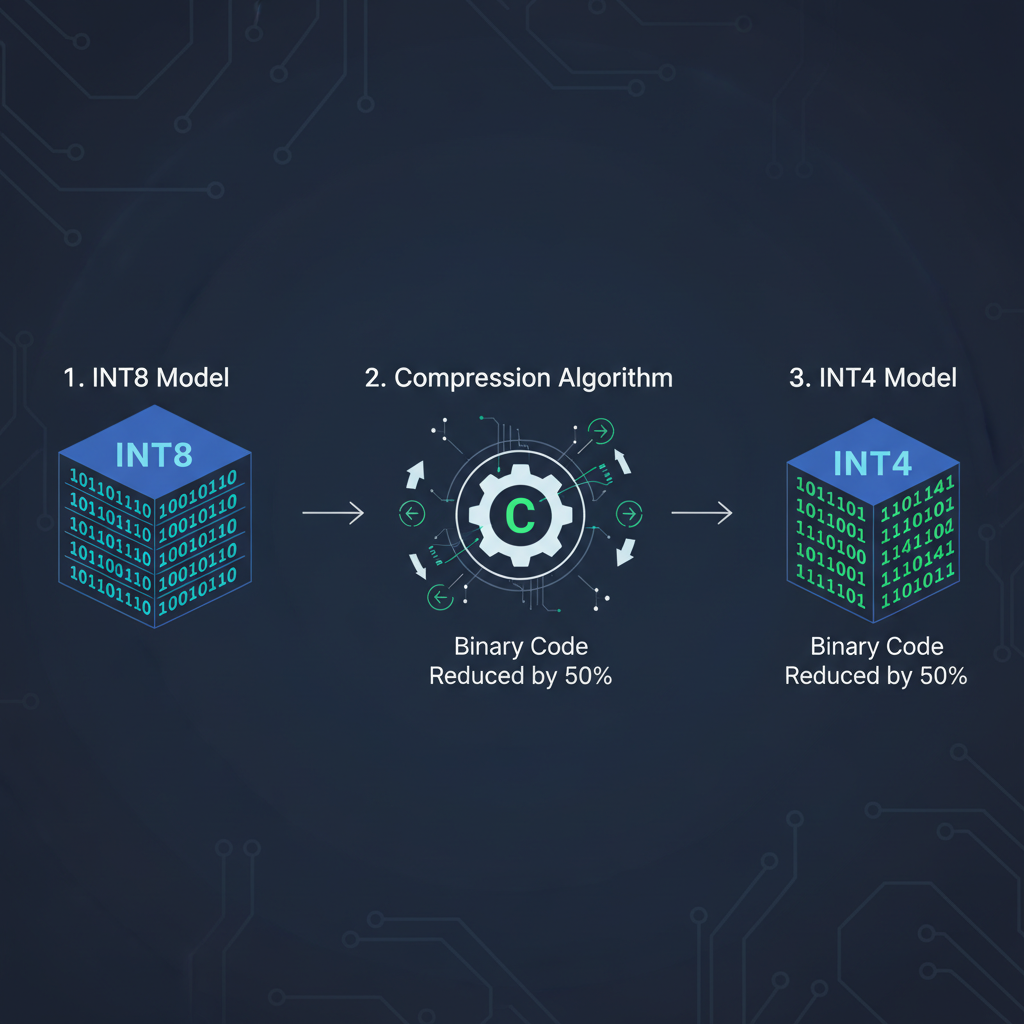

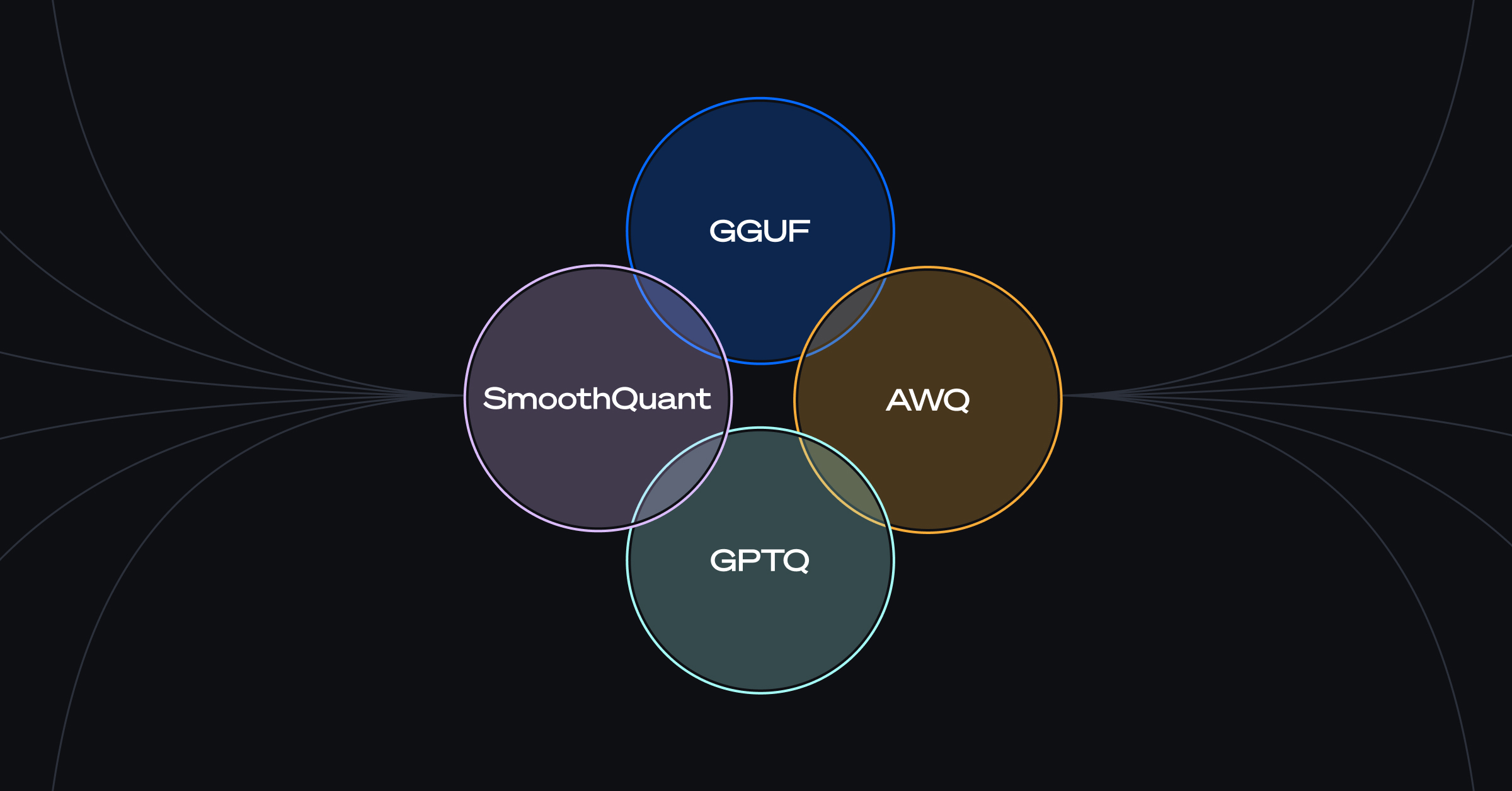

Quantized Edge Inference for Ultra-Low Latency: Compress models to 8-bit for sub-10ms decisions on edge devices, crushing latency-bound rivals in live AI Arena events.

Transfer Learning from MOBA AI Benchmarks: Steal the Masters’ Moves

Why reinvent the wheel when MOBA AI pioneers paved the way? Strategy one kicks off with transfer learning from MOBA AI benchmarks. Take pre-trained League of Legends or Dota 2 agents, from those 2015 influence-map navigators onward, and fine-tune them for Tiny Legends’ compact arenas. Opinion: this is low-hanging fruit, even for newcomers. In the current meta, REKT’s Sluggers adapted MOBA lane-pushing logic to zone control, netting 20% win-rate boosts. Start from published MOBA work such as OpenAI Five (its architecture is documented, though its trained weights were never publicly released), inject Tiny Legends’ fog-of-war dynamics, and your agent sniffs out ambushes like a pro. Practical tip: freeze the early layers for map awareness and retrain the action head on arena replays. Boom: an instant edge without from-scratch training.
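A minimal sketch of the freeze-and-retrain tip, written in plain Python so it runs without a deep learning framework. `ENCODER_W` is a hypothetical stand-in for pretrained map-awareness layers, and the replays are toy (observation, action) pairs; a real pipeline would apply the same idea with a framework’s parameter freezing on an actual checkpoint.

```python
import math
import random

# Hypothetical "pretrained" encoder weights standing in for frozen early layers
# (2 hidden units x 3 input features). These are never updated below.
ENCODER_W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]

def encode(obs):
    """Frozen feature extractor: one ReLU layer."""
    return [max(0.0, sum(w * x for w, x in zip(row, obs))) for row in ENCODER_W]

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def train_head(replays, n_actions, epochs=200, lr=0.1, seed=0):
    """Train only the action head (softmax regression) on (obs, action) replays."""
    rng = random.Random(seed)
    hidden = len(ENCODER_W)
    head = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(n_actions)]
    for _ in range(epochs):
        for obs, action in replays:
            h = encode(obs)  # encoder output is treated as a fixed input
            probs = softmax([sum(w * x for w, x in zip(row, h)) for row in head])
            for a in range(n_actions):
                grad = probs[a] - (1.0 if a == action else 0.0)  # cross-entropy gradient
                for j in range(hidden):
                    head[a][j] -= lr * grad * h[j]
    return head

def act(head, obs):
    """Greedy action from the retrained head on top of the frozen encoder."""
    h = encode(obs)
    scores = [sum(w * x for w, x in zip(row, h)) for row in head]
    return scores.index(max(scores))
```

Because `train_head` only ever writes to `head`, the encoder stays exactly as loaded, which is the whole point of freezing.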

Real-Time Neuroevolution for Adaptive Policies: Evolve or Perish

Static policies crumble in battle royale volatility. Enter strategy two: real-time neuroevolution for adaptive policies. This isn’t a lazy genetic algorithm; it’s hyper-fast evolution in which agents mutate weights mid-match based on live feedback. Creative twist: pair it with Water element surges and evolve toward fluid dodging over rigid aggression. I’ve seen WolvesDAO bots jump from 40% to 65% survival by tweaking mutation rates on the fly. Direct advice: use NEAT variants with population sizes under 50 to keep latency down, and set the fitness threshold on kills-per-minute. In Tiny Legends, where zones shrink unpredictably, this turns underdogs into apex predators. Skeptical? Test it against a baseline RL agent; your logs should show policy shifts mirroring REKT’s fire bursts.
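The loop below is a simplified sketch, not NEAT: NEAT also evolves network topology, whereas this only mutates a fixed-length weight vector. It keeps the population under 50, carries a few elites forward so the best score never regresses, shrinks the mutation rate when fitness plateaus, and scores genomes by kills-per-minute. `kills_per_minute` is a hypothetical toy function standing in for live match telemetry; each generation stands in for one round of feedback.

```python
import random

POP_SIZE = 32    # kept under 50, per the latency advice above
GENOME_LEN = 8   # hypothetical: number of policy weights being evolved

def kills_per_minute(genome):
    """Hypothetical stand-in for live match feedback.

    A real arena would measure this from telemetry; this toy version
    peaks at 10.0 when every weight equals 0.5."""
    return 10.0 - sum((w - 0.5) ** 2 for w in genome)

def mutate(genome, rate, rng):
    """Gaussian perturbation of every weight."""
    return [w + rng.gauss(0.0, rate) for w in genome]

def evolve(generations=50, mutation_rate=0.2, elite=4, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(GENOME_LEN)] for _ in range(POP_SIZE)]
    history = []
    for _ in range(generations):
        scored = sorted(pop, key=kills_per_minute, reverse=True)
        history.append(kills_per_minute(scored[0]))
        # Adapt the mutation rate on the fly: shrink it once fitness plateaus.
        if len(history) > 5 and history[-1] - history[-6] < 1e-3:
            mutation_rate *= 0.7
        parents = scored[:elite]  # elites survive unchanged
        pop = parents + [mutate(rng.choice(parents), mutation_rate, rng)
                         for _ in range(POP_SIZE - elite)]
    return scored[0], history
```

Elitism is what makes the best-of-generation score monotone; without it, a bad mutation round could throw away the current champion mid-tournament.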

Hierarchical RL for Macro and Micro Control: Command Like a General

Top agents don’t micromanage every pixel; they think in layers. Strategy three, hierarchical RL for macro and micro control, splits decisions: the high level picks targets and rotations, while the low level executes shots and jukes. Nuanced take: this mirrors human esports pros, pairing League Worlds-style macro with Clash Royale-style micro without the micromanagement frustration. For Tiny Legends, train the hierarchy on simulated 100-agent drops. The Robot Boy faction nailed this, chaining macro zone claims into micro slug-outs. Pro move: use the options framework, with macro options lasting 10-30 ticks and micro sub-policies refreshing every frame. The result? Coherent plays that dominate leaderboards, even against ensemble hordes.
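Here is a sketch of that two-layer loop under the options framework. The option names and both policies are hypothetical placeholders (a trained agent would replace them with learned networks): the macro layer is consulted only when the current option expires after 10 to 30 ticks, while the micro layer picks an action every tick.

```python
import random

class HierarchicalAgent:
    """Macro option persists for 10-30 ticks; micro policy acts every tick."""

    MACRO_OPTIONS = ["claim_zone", "rotate", "third_party"]  # hypothetical options

    def __init__(self, min_ticks=10, max_ticks=30, seed=0):
        self.rng = random.Random(seed)
        self.min_ticks, self.max_ticks = min_ticks, max_ticks
        self.option = None
        self.ticks_left = 0

    def macro_policy(self, state):
        # Placeholder: a trained high-level policy would score options from state.
        return self.rng.choice(self.MACRO_OPTIONS)

    def micro_policy(self, option, state):
        # Placeholder: one low-level rule set per option (move, juke, shoot, ...).
        if option == "claim_zone":
            return "move_to_zone"
        if option == "rotate":
            return "juke" if state.get("under_fire") else "move"
        return "shoot" if state.get("enemy_visible") else "scout"

    def step(self, state):
        if self.ticks_left == 0:  # option terminated: ask the macro layer again
            self.option = self.macro_policy(state)
            self.ticks_left = self.rng.randint(self.min_ticks, self.max_ticks)
        self.ticks_left -= 1
        return self.option, self.micro_policy(self.option, state)
```

Usage: call `agent.step(state)` once per game tick; the returned pair shows which macro intent is active and which micro action it produced this frame.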

These opening salvos set the foundation, but the meta demands more. Domain randomization toughens your bot against arena quirks, while graph nets predict enemy paths through clutter.
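The graph-net idea can be sketched as message passing over an arena graph followed by a softmax over the enemy’s neighboring nodes. Everything here is an assumption for illustration: the five-node graph, the per-node features (cover, loot, distance to the safe zone), and the hand-set readout weights, which a real GNN would learn from replays rather than fix by hand.

```python
import math

# Hypothetical arena graph: nodes are map cells, edges are walkable links.
EDGES = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2, 4], 4: [3]}
# Hypothetical per-node features: [cover, loot, distance_to_safe_zone]
FEATURES = {0: [0.2, 0.1, 0.9], 1: [0.8, 0.0, 0.6], 2: [0.1, 0.9, 0.7],
            3: [0.5, 0.2, 0.3], 4: [0.3, 0.1, 0.0]}

def message_pass(features, edges, self_w=0.5, neigh_w=0.5):
    """One GNN layer: mix each node's features with the mean of its neighbors'."""
    out = {}
    for node, feats in features.items():
        nbrs = edges[node]
        mean = [sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(len(feats))]
        out[node] = [self_w * f + neigh_w * m for f, m in zip(feats, mean)]
    return out

def next_node_probs(current, features, edges, readout=(1.0, 0.5, -2.0), layers=2):
    """Probability that an enemy at `current` moves to each neighboring node."""
    h = features
    for _ in range(layers):
        h = message_pass(h, edges)
    nbrs = edges[current]
    # Readout: favor cover and loot, penalize distance from the safe zone.
    scores = [sum(w * x for w, x in zip(readout, h[n])) for n in nbrs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return {n: e / total for n, e in zip(nbrs, exps)}
```

Two rounds of passing let each node’s embedding reflect its two-hop surroundings, so a cell next to a loot-rich corridor scores higher than its own features alone would suggest; an ambush planner would then camp the highest-probability node.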

Domain Randomization for Robust Arena Adaptation: Armor Against Chaos

Strategy four, domain randomization for robust arena adaptation, is your armor against Tiny Legends’ chaos. Arenas shift with procedural maps, weather flips, and element buffs, so train in randomized hellscapes: vary gravity, spawn densities, even fog thickness. Opinion: skip this, and your agent chokes on REKT’s Fire meta surprises. WolvesDAO’s rise? They randomized Water flows, boosting cross-map viability by 35%. Practical tip: use curriculum learning; start with vanilla arenas and ramp up the distortions over epochs. In an AI agent battle royale, this breeds survivors that laugh off zone shrinks and $NRN-boosted hazards.
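A minimal sketch of curriculum-style domain randomization, assuming hypothetical parameter names and ranges: at epoch 0 every sampled arena is vanilla, and the sampling interval widens linearly until the final epoch draws from the full range.

```python
import random

# Hypothetical vanilla arena settings and the widest range for each parameter.
VANILLA = {"gravity": 9.8, "spawn_density": 1.0, "fog_thickness": 0.0}
MAX_RANGE = {"gravity": (5.8, 13.8), "spawn_density": (0.2, 1.8),
             "fog_thickness": (0.0, 0.9)}

def sample_arena(epoch, total_epochs, rng):
    """Draw one randomized arena config whose spread ramps up with the epoch."""
    level = min(1.0, epoch / max(1, total_epochs - 1))  # 0.0 at start, 1.0 at end
    config = {}
    for key, base in VANILLA.items():
        lo, hi = MAX_RANGE[key]
        # Interpolate the interval from [base, base] toward the full [lo, hi].
        config[key] = rng.uniform(base + (lo - base) * level,
                                  base + (hi - base) * level)
    return config
```

Interpolating the interval bounds (rather than clipping a symmetric spread) keeps parameters like fog thickness valid at every stage without biasing samples toward a boundary.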

Ensemble Decision-Making for Uncertainty Reduction: Calculated Crushes

Uncertainty kills faster than bullets, so strategy six layers in ensemble decision-making for uncertainty reduction. Run 5-10 lightweight heads in parallel, mixing aggressive and conservative styles, and combine their votes with Bayesian weights. Nuanced view: this counters neuroevolution’s wild swings and keeps decisions stable through $NRN volatility spikes. REKT pairs ensembles with Fire bursts for a 75% finals-reach rate. Tip: distill the ensemble into a single head after training for speed, and use dropout variance as an uncertainty proxy. In Tiny Legends tournaments, ensembles turn coin-flip fights into calculated crushes, especially against simpler meta models.
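One way to implement the weighted vote, as a sketch under stated assumptions: each head’s weight is its posterior-mean accuracy under a Beta(1, 1) prior, computed from hypothetical past (correct, total) counts, and the variance of the heads’ probabilities for the chosen action serves as the uncertainty score. That head-disagreement measure is a swapped-in stand-in for the dropout-variance proxy mentioned above, not the same technique.

```python
ACTIONS = ["push", "hold", "retreat"]  # hypothetical action set

def bayes_weight(correct, total, prior_a=1.0, prior_b=1.0):
    """Posterior-mean accuracy under a Beta(prior_a, prior_b) prior."""
    return (correct + prior_a) / (total + prior_a + prior_b)

def ensemble_decide(head_probs, head_records):
    """Weighted vote across heads, plus head disagreement as an uncertainty score.

    head_probs:   per-head probability lists (one entry per action)
    head_records: per-head (correct, total) counts from past decisions
    """
    weights = [bayes_weight(c, t) for c, t in head_records]
    z = sum(weights)
    fused = [sum(w * p[a] for w, p in zip(weights, head_probs)) / z
             for a in range(len(ACTIONS))]
    best = max(range(len(ACTIONS)), key=fused.__getitem__)
    votes = [p[best] for p in head_probs]
    mean = sum(votes) / len(votes)
    variance = sum((v - mean) ** 2 for v in votes) / len(votes)
    return ACTIONS[best], fused, variance
```

Usage: with an aggressive head favoring `push`, a conservative head favoring `retreat`, and a third head leaning `push`, the better track records win the vote, and a high `variance` flags the call as contested so a fallback (for example a rule-based policy) can take over.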

Quantized Edge Inference for Ultra-Low Latency: Speed Kills

Last but lethal, strategy seven: quantized edge inference for ultra-low latency. Compress models to 8-bit integers and deploy them on-device for sub-10ms actions. Forget cloud lag in real-time arenas; this is edge computing dominance. Opinion: without it, even genius agents lag behind REKT’s bruisers. AI BattleGround winners quantize their GNN pathfinders, shaving 40ms per decision. Hands-on: use TensorRT or ONNX Runtime and profile on the actual arena hardware. Pair quantization with domain randomization so the compressed model stays robust. The result? Your agent jukes before foes can think.
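TensorRT and ONNX Runtime handle INT8 conversion (including calibration) for you; the hand-rolled sketch below is not either library’s API. It only illustrates what symmetric per-tensor 8-bit quantization does to a weight vector and why integer dot products are cheap: the multiply-accumulate runs in integers and is rescaled once at the end.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integer codes back to approximate float values."""
    return [scale * v for v in q]

def int8_dot(q_w, w_scale, q_x, x_scale):
    """Integer multiply-accumulate, rescaled once at the end."""
    acc = sum(a * b for a, b in zip(q_w, q_x))  # pure integer arithmetic
    return acc * w_scale * x_scale
```

Each weight’s rounding error is at most half a quantization step (`scale / 2`), which is why outlier weights that inflate `max_abs` hurt accuracy, and why the profiling step above matters before trusting the speedup.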

Stack these seven, and your bots don’t just compete; they conquer 2026’s AI gaming arenas. Factions like Robot Boy prove that hybrids win: Water-adapted ensembles with edge speed. Dive into the leaderboards, tweak per patch, and claim those prizes. Momentum in AI battles mirrors markets: catch the meta early and ride it hard. Build now, dominate tomorrow.