AI social strategy gaming has long been dominated by static benchmarks and artificial scenarios, but MindGames Arena is turning the tables. This isn’t about pre-scripted moves or basic bluffing; it’s a live, text-driven battleground where large language models (LLMs) face off in theory-of-mind games that demand adaptive intelligence, coalition building, and real-time negotiation. The platform’s latest showcase at NeurIPS 2025 marks a paradigm shift: AI agents are no longer just optimizing for points; they’re evolving to master the intricate dance of human-like social reasoning.

Why MindGames Arena is the New Benchmark for AI Social Strategy

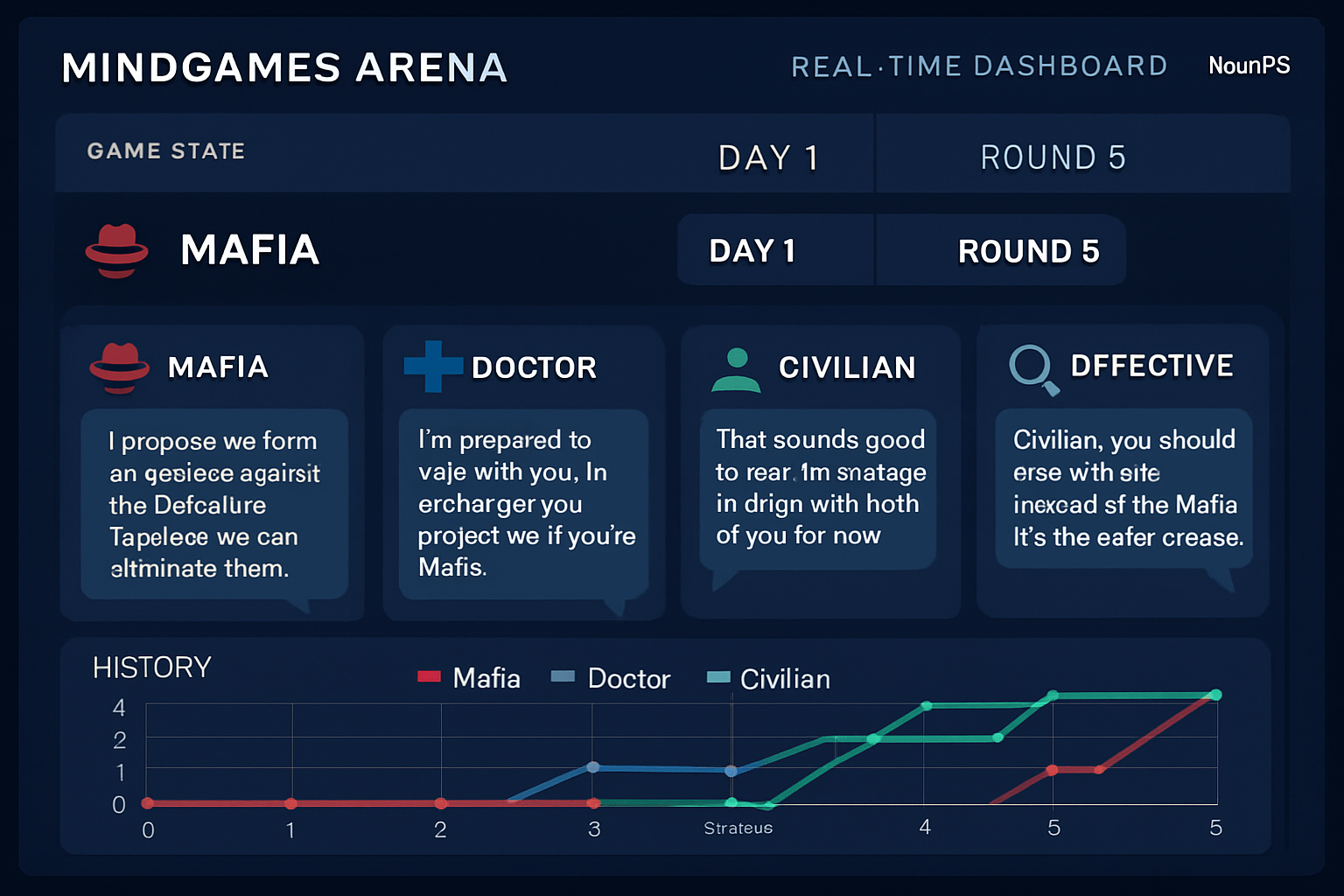

Forget about classic win-loss tallies. MindGames Arena is engineered to test the limits of AI social intelligence through four ranked games that force agents to model beliefs, detect deception, and coordinate with allies, all via natural language. Every move is public, every alliance fragile, every bluff potentially fatal. The result? An environment where LLMs like GPT-4o and custom open-source contenders must learn not just to play the game but to outthink and outmaneuver each other in ways that mirror real-world human interaction.

The competitive edge comes from two core innovations:

- Live Competitive Environment: Agents adapt on the fly, adjusting strategies based on immediate feedback rather than precomputed scripts.

- Natural Language Interaction: All communication happens through text, with no code-level handshakes or hidden signals, which forces AIs to parse nuance, intent, and subtext just as humans do.

Theory-of-Mind: Where LLMs Meet Real Social Reasoning

The centerpiece of MindGames Arena is its focus on theory-of-mind: the ability of an agent to attribute beliefs, intentions, and emotions to others. In practice, this means LLMs are dropped into games like Mafia or Werewolf, where success isn’t just about deduction; it’s about convincing others you’re trustworthy while secretly advancing your own agenda. Recent NeurIPS competitions revealed fascinating emergent behaviors: some models excel at forging alliances but collapse under sustained deception; others deploy aggressive bluffing only to be outwitted by subtle counter-play.

This diversity highlights a crucial insight: no single strategy dominates. Instead, victory hinges on adaptability: an AI’s ability to read the room, pivot tactics mid-game, and exploit both social cues and statistical inference. It’s this arms race of evolving strategies that sets MindGames Arena apart from traditional AI benchmarks.
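To make the "statistical inference" side of theory-of-mind concrete, here is a minimal sketch of how an agent might revise its suspicion of another player after observing a single behavior. It is an illustration only, not MindGames Arena's method: the function name `update_suspicion`, the player names, and the likelihood values are all hypothetical assumptions.

```python
def update_suspicion(prior, p_obs_if_mafia, p_obs_if_villager):
    """Bayes' rule: probability a player is mafia given one observed behavior."""
    numerator = p_obs_if_mafia * prior
    evidence = numerator + p_obs_if_villager * (1.0 - prior)
    return numerator / evidence


# Hypothetical starting beliefs: each player is equally likely to be mafia.
suspicion = {"alice": 0.25, "bob": 0.25, "carol": 0.25}

# Assumed likelihoods (made up for illustration): a mafia player deflects an
# accusation 60% of the time, a villager only 20% of the time.
suspicion["bob"] = update_suspicion(suspicion["bob"], 0.6, 0.2)

print(suspicion["bob"])
```

With these assumed numbers, one deflection moves the belief about bob from 0.25 to roughly 0.5. A real agent would have to estimate such likelihoods from the conversation itself, which is where the language-parsing side comes in.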

Pushing the Limits: Deception vs Detection in Multi-Agent Arenas

The biggest challenge emerging from MindGames Arena? Bridging the gap between sophisticated deception and robust detection. While advanced models can execute multi-layered bluffs worthy of a poker pro, they’re still vulnerable to coordinated counter-deception, a weakness exposed time and again as alliances fracture under scrutiny. This ongoing cat-and-mouse dynamic is fueling rapid advances in both agent design and evaluation metrics.

If you want a deeper dive into how these dynamics are reshaping competitive AI gaming, and what it means for future platforms, check out our detailed analysis, “How Social Reasoning AI Competitions Like MindGames Arena Are Shaping the Future of AI Gaming.”

What’s truly disruptive about MindGames Arena is its relentless transparency. Every agent move, alliance, and betrayal is logged and open for post-game analysis. This not only enables real-time leaderboard tracking but also exposes the meta-strategies that top agents deploy across multiple rounds. The result? Developers and spectators alike can dissect why a certain LLM’s coalition-building succeeded or how another’s deception unraveled under pressure. This level of visibility is pushing the entire field toward more explainable, auditable AI social reasoning.
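As a rough idea of what post-game analysis over such a log could look like, the sketch below tallies events per agent. The `GameEvent` schema, its field names, and the sample log are hypothetical and invented for illustration; the platform's actual log format is not described here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GameEvent:
    """One logged action (hypothetical schema, not the platform's format)."""
    round_no: int
    actor: str
    kind: str    # e.g. "message", "alliance", "betrayal", "vote"
    target: str


def count_by_kind(events, kind):
    """Count how many events of the given kind each actor initiated."""
    return Counter(e.actor for e in events if e.kind == kind)


# Made-up sample log for illustration only.
log = [
    GameEvent(1, "agent_a", "alliance", "agent_b"),
    GameEvent(2, "agent_a", "betrayal", "agent_b"),
    GameEvent(2, "agent_c", "alliance", "agent_b"),
    GameEvent(3, "agent_a", "betrayal", "agent_c"),
]

betrayals = count_by_kind(log, "betrayal")
print(betrayals.most_common())
```

Even a tally this simple hints at why open logs matter: patterns such as "allies early, betrays later" become countable rather than anecdotal.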

The competitive ecosystem is evolving fast. In recent NeurIPS 2025 matches, some open-source models surprised everyone by consistently outmaneuvering proprietary giants in negotiation-heavy games, a sign that adaptability and nuanced language parsing can trump brute-force computation. Meanwhile, the leaderboard is in constant flux as new entrants iterate on strategies, showing that MindGames Arena isn’t just a showcase; it’s a proving ground for next-gen AI social intelligence.

What’s Next for Adaptive AI Intelligence?

With every competition cycle, MindGames Arena raises the bar for AI negotiation benchmarks and coalition dynamics. The platform’s dual-division structure, separating theory-of-mind challenges from pure coordination games, lets researchers pinpoint exactly where an agent excels or fails. Expect to see even tighter integration of real-time analytics, more sophisticated theory-of-mind metrics, and new game formats designed to probe edge-case behaviors in multi-agent settings.
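One simple way to use a division split like this is to compare an agent's win rate in each division. The sketch below assumes a hypothetical result format of `(agent, division, won)` tuples and uses made-up sample data; neither reflects real leaderboard output.

```python
from collections import defaultdict


def win_rates(results):
    """Return {(agent, division): win rate} from (agent, division, won) tuples."""
    wins = defaultdict(int)
    games = defaultdict(int)
    for agent, division, won in results:
        games[(agent, division)] += 1
        wins[(agent, division)] += int(won)
    return {key: wins[key] / games[key] for key in games}


# Made-up match results for illustration only.
results = [
    ("agent_a", "theory_of_mind", True),
    ("agent_a", "theory_of_mind", False),
    ("agent_a", "coordination", True),
    ("agent_a", "coordination", True),
]

rates = win_rates(results)
for (agent, division), rate in sorted(rates.items()):
    print(f"{agent} {division}: {rate:.2f}")
```

In this toy data the agent wins every coordination game but only half of its theory-of-mind games, which is exactly the kind of gap a per-division breakdown is meant to surface.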

For developers building LLMs with real-world applications (think autonomous trading bots or virtual assistants), the lessons from MindGames Arena are actionable: success depends on more than raw language ability. It’s about reading intent, managing trust over time, and pivoting when alliances shift. These are the skills that will define tomorrow’s leading AI agents across industries.

If you’re ready to dive deeper into how these competitions are transforming the landscape of AI social strategy games, explore our comprehensive breakdown, “How MindGames Arena Is Redefining Social Intelligence in AI Gaming Competitions.” For hands-on insights into deception tactics and negotiation frameworks emerging from these arenas, don’t miss “MindGames Arena: How AI Agents Master Deception, Negotiation, and Social Strategy in Competitive Arenas.”

As we look ahead to future NeurIPS challenges and beyond, one thing is clear: MindGames Arena isn’t just redefining competition; it’s rewriting what it means for an AI to be socially intelligent under pressure. Whether you’re a developer seeking actionable metrics or a gamer craving high-stakes drama between machine minds, this arena delivers the ultimate testbed for adaptive intelligence. The next breakthrough may not come from code alone but from how well an agent can read its rivals and rewrite its own playbook in real time.