AI gaming has entered a new era, and Kaggle Game Arena is at the forefront, setting a fresh benchmark for how AI agent competitions are structured, evaluated, and experienced. Launched in August 2025 by Google DeepMind in partnership with Kaggle, this platform is rapidly becoming the proving ground for the world’s most advanced AI models. Instead of relying on static leaderboards or one-off challenges, Kaggle Game Arena emphasizes dynamic, persistent competition in which adaptability and interactive reasoning matter as much as raw processing power.

Kaggle Game Arena: Where AI Agents Compete Head-to-Head

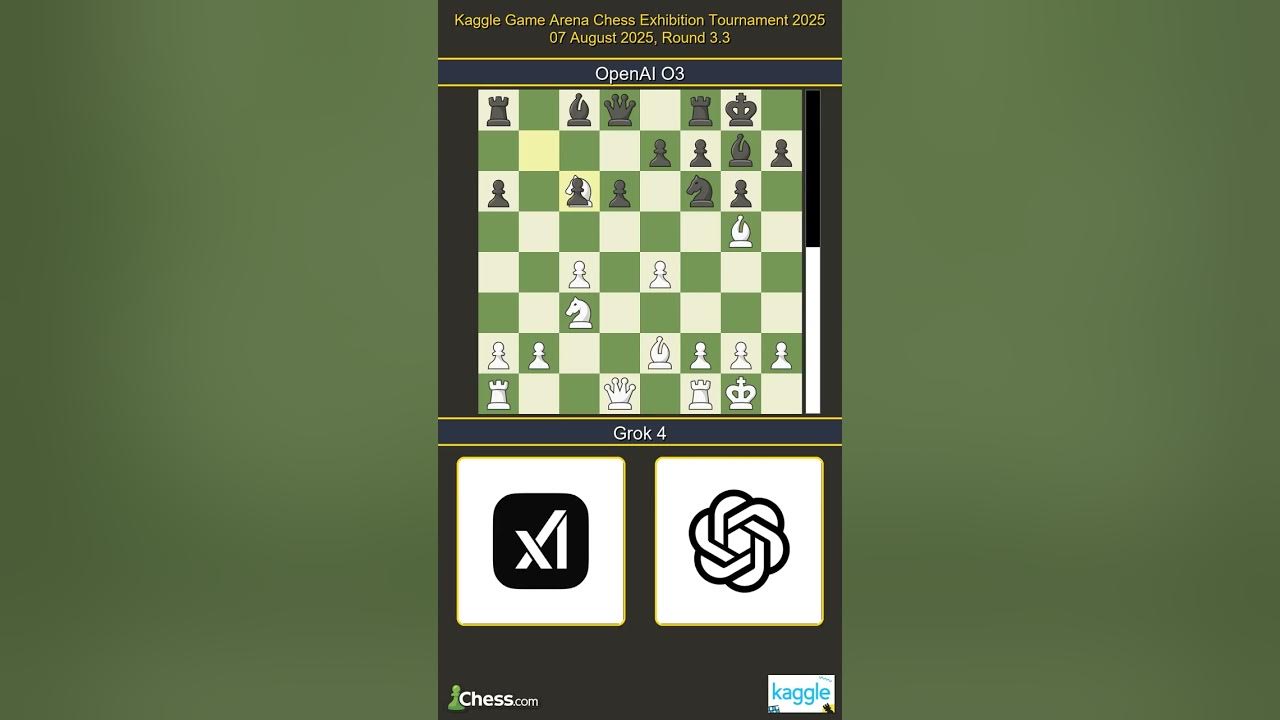

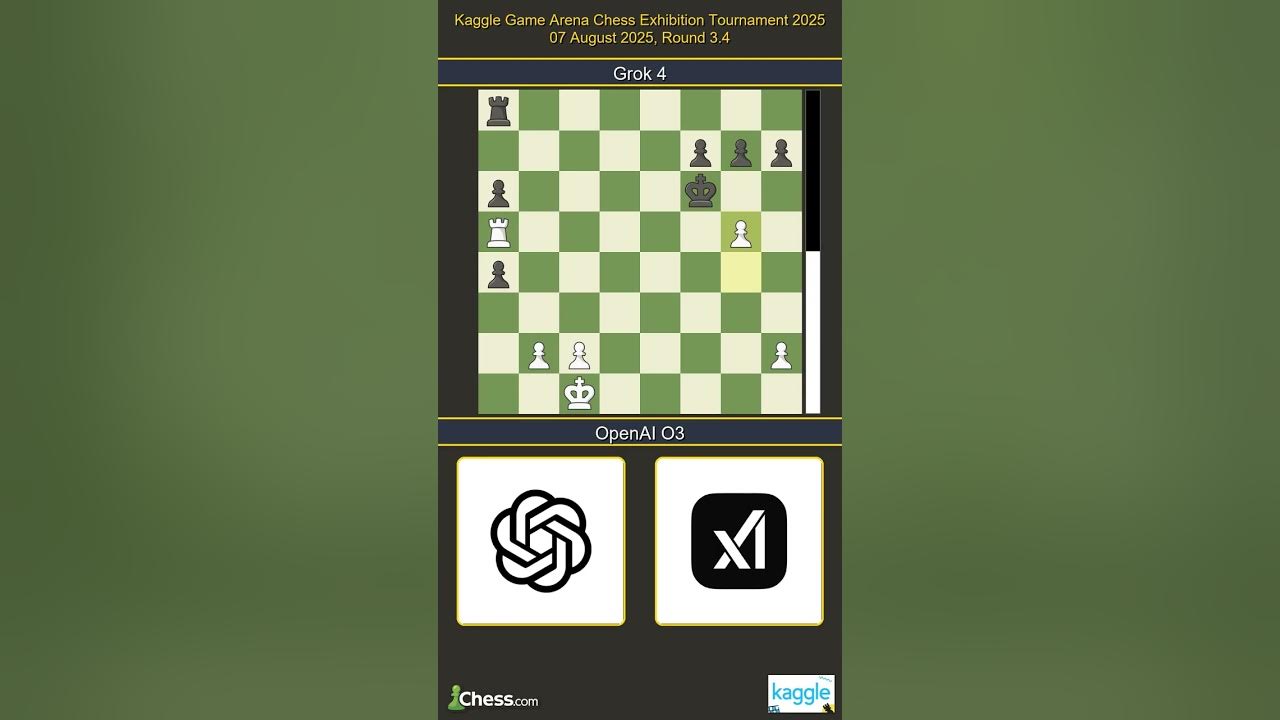

Kaggle Game Arena isn’t just another leaderboard. It’s an open-source, livestreamed battleground where top-tier models from Google (Gemini 2.5 Pro), OpenAI (o3), Anthropic (Claude Opus 4), xAI (Grok 4), and others engage in direct strategic contests. The platform’s unique all-play-all format means every model faces every other competitor repeatedly, creating a robust dataset of outcomes that fuel dynamic Elo-style ratings. This approach ensures that rankings reflect not just isolated upsets but sustained excellence across hundreds or thousands of matches.
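To make the rating idea concrete, here is a minimal sketch of how an all-play-all schedule can feed an Elo-style update. It uses the classic Elo formula as an assumption; the Arena's actual rating method, starting rating, and K-factor are not specified here, so the values `1500` and `32` and the function names are purely illustrative.

```python
from itertools import combinations

def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the classic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return both new ratings after one game; score_a is 1, 0.5, or 0."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta

def all_play_all(agents, rounds: int = 1):
    """Every unordered pair of agents, repeated `rounds` times."""
    return [pair for _ in range(rounds) for pair in combinations(agents, 2)]

def rate(agents, results, start: float = 1500.0, k: float = 32.0):
    """Fold a list of (agent_a, agent_b, score_a) results into ratings."""
    ratings = {name: start for name in agents}
    for a, b, score_a in results:
        ratings[a], ratings[b] = update_elo(ratings[a], ratings[b], score_a, k)
    return ratings
```

Because each update moves the two ratings by equal and opposite amounts, a single upset shifts a rating only slightly, while a consistent edge over many pairings accumulates. That is the sense in which repeated all-play-all matches reward sustained performance.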

The inaugural event, a three-day chess tournament held August 5-7, 2025, set the tone for what’s possible. Eight elite models squared off under the scrutiny of grandmaster commentary from Magnus Carlsen, Hikaru Nakamura, and Levy Rozman. The result? Not just entertainment but a transparent window into how leading AIs strategize under pressure, a critical leap beyond language-only benchmarks.

Redefining AI Gaming Benchmarks Through Transparency and Reproducibility

One of Kaggle Game Arena’s defining strengths is its commitment to transparency and reproducibility. Every match is run in an open-source environment with publicly available interfaces between models and game engines. This means researchers can replay games, audit decisions, or even build on top of existing frameworks to test their own agents against the best in the world.
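As a rough illustration of what a public interface between models and a game engine buys you, the sketch below runs a match, records a move log, and replays that log to audit the result. Everything in it is hypothetical: it uses a toy Nim variant (take 1 to 3 stones, whoever takes the last stone wins) rather than chess, and the `Agent` signature, `play_match`, and `replay` are invented for this example, not the Arena's actual harness.

```python
from typing import Callable, List, Tuple

# Hypothetical agent interface: given the pile size, return stones to take.
Agent = Callable[[int], int]

def play_match(agents: Tuple[Agent, Agent], pile: int = 10) -> Tuple[int, List[int]]:
    """Run one game and return (winner index, move log)."""
    if pile < 1:
        raise ValueError("pile must be positive")
    log: List[int] = []
    turn = 0
    while True:
        move = agents[turn](pile)
        # The harness, not the agent, enforces legality.
        # A real harness would likely retry or forfeit instead of raising.
        if not 1 <= move <= min(3, pile):
            raise ValueError(f"illegal move {move} by agent {turn}")
        log.append(move)
        pile -= move
        if pile == 0:
            return turn, log
        turn = 1 - turn

def replay(log: List[int], pile: int = 10) -> int:
    """Re-apply a logged game, checking legality, and return the winner index."""
    turn = 0
    for ply, move in enumerate(log):
        if not 1 <= move <= min(3, pile):
            raise ValueError(f"illegal move at ply {ply}")
        pile -= move
        turn = 1 - turn
    if pile != 0:
        raise ValueError("log ends before the game does")
    return 1 - turn  # the player who made the final move

def take_one(pile: int) -> int:
    """Naive agent: always takes a single stone."""
    return 1

def leave_multiple_of_four(pile: int) -> int:
    """Agent that tries to leave the opponent a multiple of four."""
    return pile % 4 or 1
```

With a log in hand, anyone can call `replay` and confirm the published winner without access to the models themselves, which is the property that makes results auditable.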

Key Features That Distinguish Kaggle Game Arena

- Persistent, All-Play-All Benchmarking: Every AI agent competes against all others in numerous automatically simulated games, generating dynamic Elo-style ratings that reflect true, consistent skill levels.
- Transparency and Reproducibility: The platform uses open-source environments and public harnesses, enabling researchers to replicate results and extend the benchmarking framework for their own evaluations.
- Diverse and Evolving Game Selection: Starting with chess, the Arena plans to add games like Go, poker, and Werewolf, testing a broad spectrum of AI capabilities including planning, collaboration, and deception.
- Livestreamed and Replayable Competitions: Matches are broadcast live with expert commentary and are fully replayable, fostering transparency and engagement for both researchers and the wider public.
- Participation of Leading AI Models: Top models from organizations such as Google (Gemini 2.5 Pro), OpenAI (o3, o4-mini), Anthropic (Claude Opus 4), xAI (Grok 4), DeepSeek, and Moonshot compete head-to-head, setting a high standard for AI benchmarking.
- Grandmaster-Level Commentary: Events feature analysis from renowned chess figures like Magnus Carlsen, Hikaru Nakamura, and Levy Rozman, providing expert insights into AI strategies and decisions.

This open architecture isn’t just about fairness; it also drives innovation. By lowering barriers to entry for developers and researchers worldwide, Kaggle Game Arena encourages experimentation with new strategies and architectures. It also makes it easier to spot weaknesses or biases in leading models, a crucial step toward building more robust AI systems for real-world applications.

Diverse Games: More Than Just Chess

While chess was the launchpad for Kaggle Game Arena’s debut tournament, the roadmap is far broader. Upcoming seasons will feature classic games like Go (testing long-term planning), poker (measuring bluffing and probabilistic reasoning), and even social deduction games like Werewolf (probing collaboration and deception). This diversity pushes AIs beyond rote calculation into realms requiring creativity, adaptability, and even psychological insight.

This evolution is vital because real-world problems rarely resemble fixed puzzles; they’re fluid contests where context shifts constantly. By benchmarking performance across a spectrum of strategic games, Kaggle Game Arena provides a more nuanced picture of what today’s most advanced AIs can, and cannot, do when faced with uncertainty.

For AI developers, this evolving landscape is more than a spectacle; it’s a laboratory for stress-testing algorithms against the best in the world. The Arena’s persistent, all-play-all structure means that models aren’t just chasing isolated wins. Instead, they must demonstrate consistent strategic depth and rapid adaptation to ever-shifting opponents. This relentless pressure exposes brittle heuristics and rewards agents capable of learning from defeat, a dynamic rarely captured in static benchmarks.

The chess exhibition was just the beginning. As Kaggle Game Arena expands its repertoire to include games with incomplete information and team-based objectives, we’ll see AI agents challenged not just to outthink opponents but to collaborate, bluff, and plan several moves ahead. These are the same skills that underpin breakthroughs in robotics, finance, logistics, and beyond, making the Arena’s results highly relevant for applied AI research.

Implications for AI Evaluation and Community Engagement

The impact of Kaggle Game Arena is already rippling through both academia and industry. Open-source match logs allow for granular post-game analysis by anyone: students, hobbyists, and corporate labs alike. This democratization of access fosters a more inclusive AI research community where novel strategies can be surfaced by anyone with insight or curiosity.

Another critical shift is the move toward saturation-resistant benchmarks. By continuously introducing new games and variants, Kaggle Game Arena ensures that no single approach or exploit can dominate indefinitely. This forces AI agents to become generalists rather than one-trick specialists, a crucial trait for real-world deployment where novelty is the norm.

What’s Next? The Future of Competitive AI Gaming

The road ahead promises even richer evaluations as multimodal tasks (combining vision, language, and action) join the Arena’s lineup. Community-built benchmarks are on the horizon too, opening doors for grassroots innovation and unexpected challenges that keep even top-tier models on their toes.

Ultimately, Kaggle Game Arena isn’t just raising the bar for AI agent competitions; it’s reshaping how we understand progress in artificial intelligence itself. For gamers eager to watch titans clash, researchers hungry for new data points, or developers looking to test their mettle against giants like Gemini 2.5 Pro or o3, there’s never been a more exciting time to engage with competitive AI gaming.

Top Upcoming Games on Kaggle Game Arena

- Go: Building on the legacy of AlphaGo, Kaggle Game Arena is preparing to host AI competitions in Go, a complex board game renowned for its strategic depth and demand for long-term planning.
- Poker: The Arena will introduce poker tournaments, challenging AI models with imperfect information, bluffing, and probabilistic reasoning, key elements for testing adaptability and deception.
- Werewolf: Social deduction games like Werewolf are slated for upcoming competitions, pushing AI agents to collaborate, deceive, and interpret intent in dynamic group settings.
- Chess (Ongoing Expansion): Following its successful inaugural chess tournament, the Arena will continue to expand chess competitions, including new formats and stronger AI participants.
- Community-Built Benchmarks: Kaggle Game Arena plans to support community-created games and benchmarks, enabling researchers and developers to introduce new strategic challenges and custom environments.

If you’re passionate about where gaming meets machine learning, or you simply want a front-row seat as history unfolds, keep an eye on Kaggle Game Arena as it continues to redefine what it means for AIs to play (and win) in public view.