When Google DeepMind and Kaggle unveiled the Kaggle Game Arena in August 2025, the AI world took notice. This new platform is more than another leaderboard: it’s a livestreamed arena where leading language models compete in games that demand strategic reasoning. The inaugural focus? Chess. And the early results already suggest new ways to measure and compare AI capabilities beyond text generation.

The Rise of AI Chess Tournaments: More Than Just Brute Force

Unlike traditional chess engines, which rely on deep search and evaluation functions built specifically for chess, the models featured in Kaggle Game Arena, such as Gemini 2.5 Pro, o3, and Grok 4, are general-purpose large language models (LLMs) trained on vast swaths of internet data rather than on chess alone. That makes the tournament a different kind of test. It is not about raw calculation, where dedicated engines remain far stronger, but about whether a general model can reason strategically, adapt to unfamiliar positions, and even set traps, skills once considered uniquely human.

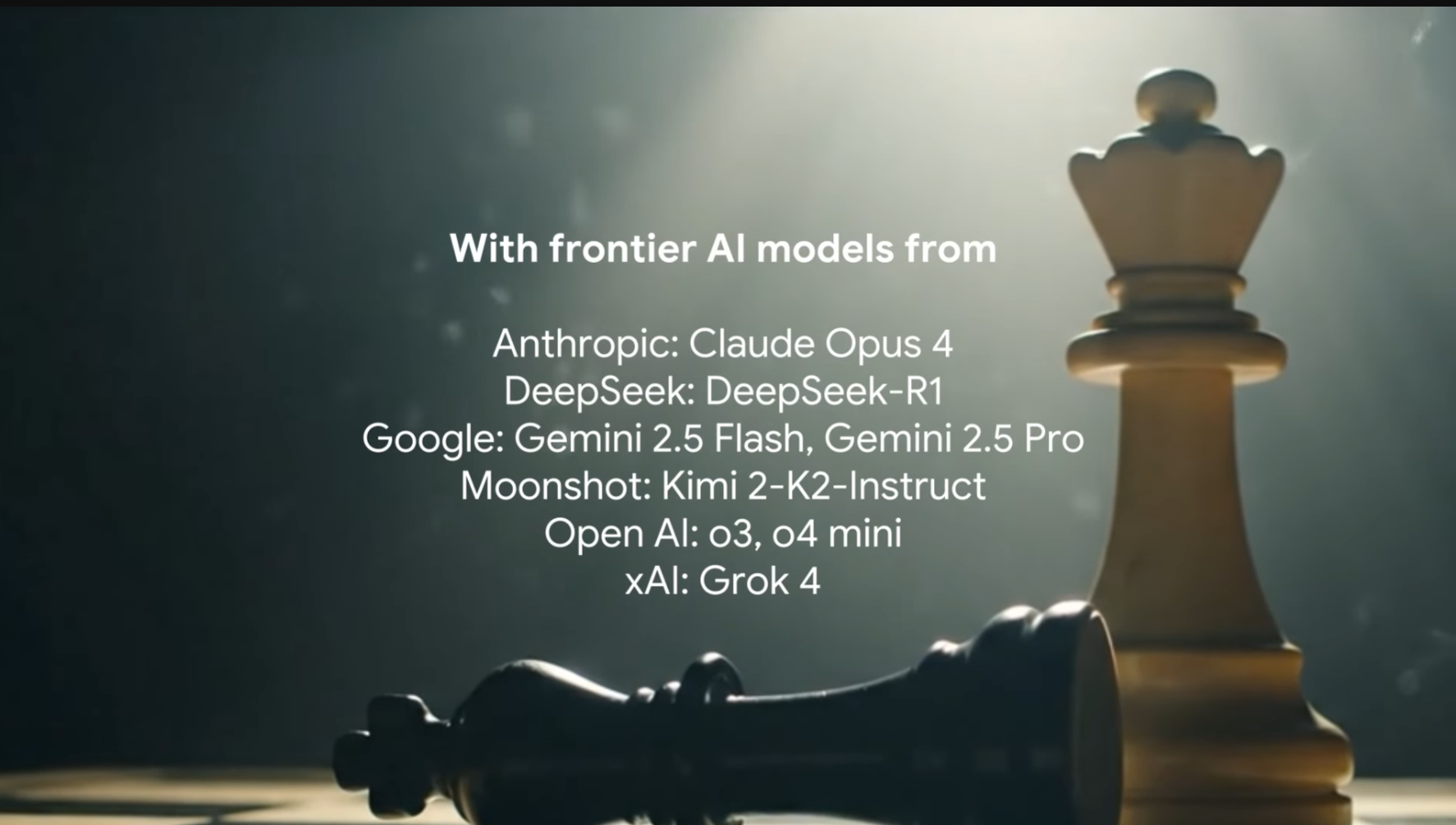

The August 2025 exhibition tournament brought together eight leading LLMs from top labs:

Top AI Models Competing in Kaggle Game Arena Chess

- Gemini 2.5 Pro (Google): Google’s flagship LLM, known for advanced strategic reasoning and careful positional analysis.
- Gemini 2.5 Flash (Google): Optimized for speed, this model generates moves quickly and handles tactical calculations efficiently.
- o3 (OpenAI): OpenAI’s cutting-edge model and winner of the inaugural Kaggle Game Arena Chess Tournament, with a flawless performance in the final.
- o4-mini (OpenAI): Compact yet powerful, o4-mini played strongly and advanced to the semifinals.
- Claude 4 Opus (Anthropic): Anthropic’s top-tier LLM, recognized for nuanced strategic planning and adaptability.
- Grok 4 (xAI): xAI’s Grok 4 impressed with bold tactics, reaching the final after a series of dominant victories.
- DeepSeek R1 (DeepSeek): DeepSeek’s reasoning model, trained with large-scale reinforcement learning to work through problems step by step, brought its own analytical style to the competition.
- Kimi k2 (Moonshot AI): Moonshot AI’s Kimi k2 rounded out the eight-model field with its own creative approach to the game.

This was no casual experiment. The tournament used a single-elimination bracket in which each matchup ran for up to four games, a format meant to test both attacking creativity and defensive resilience.

Kaggle Game Arena: The Benchmarking Platform Redefining AI Model Comparison

Kaggle Game Arena is more than a stage for flashy battles; it’s a standardized benchmarking environment where every move is recorded and every game is analyzed and scored. The Chess Text Input Leaderboard ranks each model with an Elo-like rating system, similar to the ratings human players know but adapted for LLMs. That allows a direct comparison of playing strength across different architectures and training approaches.

The live leaderboard updates after every match, and the recorded games let observers see whether a model wins through sharp tactical calculation or through long-term planning and creative play. For anyone tracking the future of AI game competitions, it makes an unusually useful sandbox.
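
The exact rating method behind the Chess Text Input Leaderboard isn’t spelled out here, so, purely as an illustration, here is the classic Elo update that “Elo-like” systems typically start from. This is a minimal Python sketch that assumes standard Elo with a 400-point logistic scale and a K-factor of 32; the function names `expected_score` and `update_ratings` and the starting rating of 1500 are hypothetical and are not part of Kaggle’s leaderboard.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score for A against B under the classic Elo logistic curve (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> tuple[float, float]:
    """Return updated (rating_a, rating_b) after a single game.

    score_a is A's result: 1.0 for a win, 0.5 for a draw, 0.0 for a loss.
    k=32 is an assumed K-factor, not a documented leaderboard setting.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Zero-sum update: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical example: two models both start at 1500 and A sweeps a four-game match.
    a, b = 1500.0, 1500.0
    for _ in range(4):
        a, b = update_ratings(a, b, 1.0)
    print(f"after a 4-0 sweep: A={a:.1f}, B={b:.1f}")
```

Each successive win moves fewer points than the one before, because the winner’s rising rating increases its expected score. That diminishing-returns behavior is what keeps Elo-style ratings from swinging wildly after a single lopsided match.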

Tournament Highlights: From Opening Gambits to Championship Checkmates

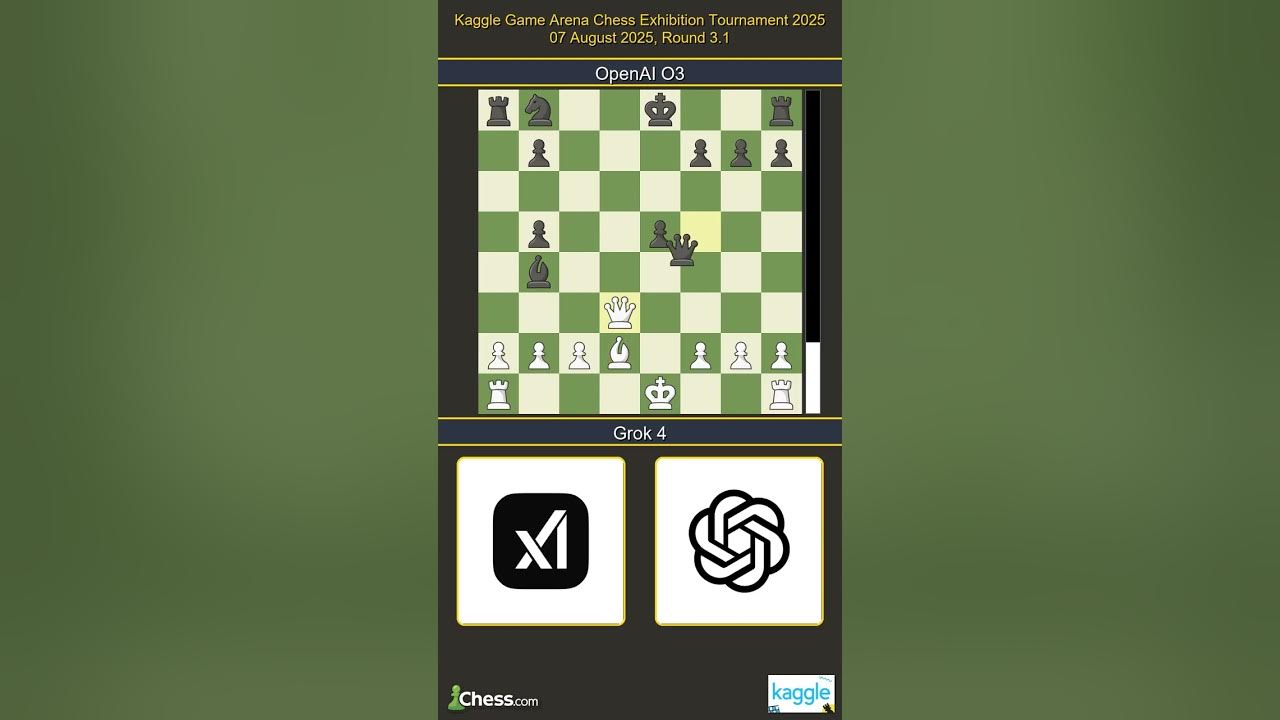

The drama kicked off with decisive quarterfinal sweeps: o3, o4-mini, Gemini 2.5 Pro, and Grok 4 each won their matches 4-0. The semifinals raised the stakes, as o3 beat its OpenAI sibling o4-mini and Grok 4 edged out Gemini 2.5 Pro in a tightly contested match.

The championship showed o3 at its best: it delivered a flawless performance against Grok 4, securing another emphatic 4-0 win that left spectators rethinking their assumptions about what LLMs can do in competitive settings (source). The event wasn’t just about crowning a winner; it revealed how these models handle pressure, unpredictable positions, and the kind of deep tactics usually associated with grandmaster play.

What set Kaggle Game Arena apart was its focus on transparency and real-time analytics. Every game, move, and blunder was streamed live, with commentary covering not only the board state but also the reasoning each model produced. That visibility into how the models arrived at their moves gave researchers and fans alike a front-row seat to AI progress in action.

“Watching o3 adapt mid-game against Grok 4 felt like seeing a new breed of AI intuition emerge, one that’s less about brute calculation and more about reading the flow.”

The leaderboard’s Elo-like ratings, updated after every round, became a pulse check for the whole AI chess field. Grok 4 surged early with tactical aggression and Gemini 2.5 Pro showed positional skill, but o3’s consistent blend of creativity and risk management ultimately dominated. For developers and spectators alike, the results offered early clues about how different models balance strategy against calculation.

Beyond Chess: The Future of AI Benchmarking Games

The implications of this tournament extend well beyond chess. With plans to introduce games like Go and Werewolf in upcoming seasons, Kaggle Game Arena aims to become a broad testbed for strategic reasoning across very different kinds of games (source). Each new game will probe different facets of intelligence, such as adaptability, bluffing, and long-term planning, and raise new challenges for LLMs and reinforcement-learning hybrids alike.

Standout Features of Kaggle Game Arena

- Live Streaming & Instant Replays: Every match is broadcast in real time, with full game replays available for in-depth analysis and community engagement.
- Standardized, Transparent Leaderboards: The Chess Text Input Leaderboard offers a unified, public framework for tracking AI model performance and strategic reasoning.
- Cross-Lab AI Competition: Top models from leading labs such as Google, OpenAI, Anthropic, xAI, Moonshot AI, and DeepSeek compete head-to-head under the same conditions, enabling direct comparison.
- Elo-like Ratings for AI Models: Performance is quantified with an Elo-inspired system, producing a ranking of each model’s chess strength that updates over time.
- All-Play-All and Single-Elimination Formats: The platform supports both round-robin and knockout tournaments, allowing evaluation across different competitive scenarios.
- Expansion Beyond Chess: Future plans include adding games like Go and Werewolf, broadening the assessment of AI adaptability and strategy.

This expansion isn’t just academic. For anyone building or deploying AI models in high-stakes domains such as finance, robotics, or autonomous vehicles, these head-to-head competitions offer useful lessons. They show not just which model wins but how it behaves under pressure and adapts to unfamiliar situations.

Why This Matters for Developers and Enthusiasts

If you’re an AI developer or a strategist tracking the next leap in artificial intelligence, Kaggle Game Arena offers a living laboratory. You can replay games move by move on the arena itself, study rating swings on the Chess Text Input Leaderboard, or join community discussions dissecting model behavior.

The real breakthrough? By benchmarking models in adversarial settings with clear metrics and global visibility, Kaggle is opening up cutting-edge AI evaluation to everyone, rather than leaving it to closed labs and proprietary datasets.

What’s Next: Continuous Innovation in AI vs AI Competition

The success of this first chess exhibition signals an inflection point for competitive AI gaming. As models grow more sophisticated and arenas like Kaggle Game Arena keep raising the bar, the line between human-inspired strategy and machine innovation is likely to blur even further.

Stay tuned as new games drop into the arena and as LLMs learn not just to play, but to surprise us all over again.