In the evolving landscape of AI gaming, MindGames Arena has emerged as a pivotal battleground for testing and benchmarking the social intelligence of artificial agents. Unlike traditional AI competitions focused on raw computation or pattern recognition, MindGames Arena spotlights complex human-like skills: deception, negotiation, alliance-building, and adaptive social reasoning. This shift reflects a broader trend in AI research, where success is measured not just by optimal moves but by an agent’s ability to navigate the messy realities of multi-agent social dynamics.

Why Social Intelligence Matters in AI Competitions

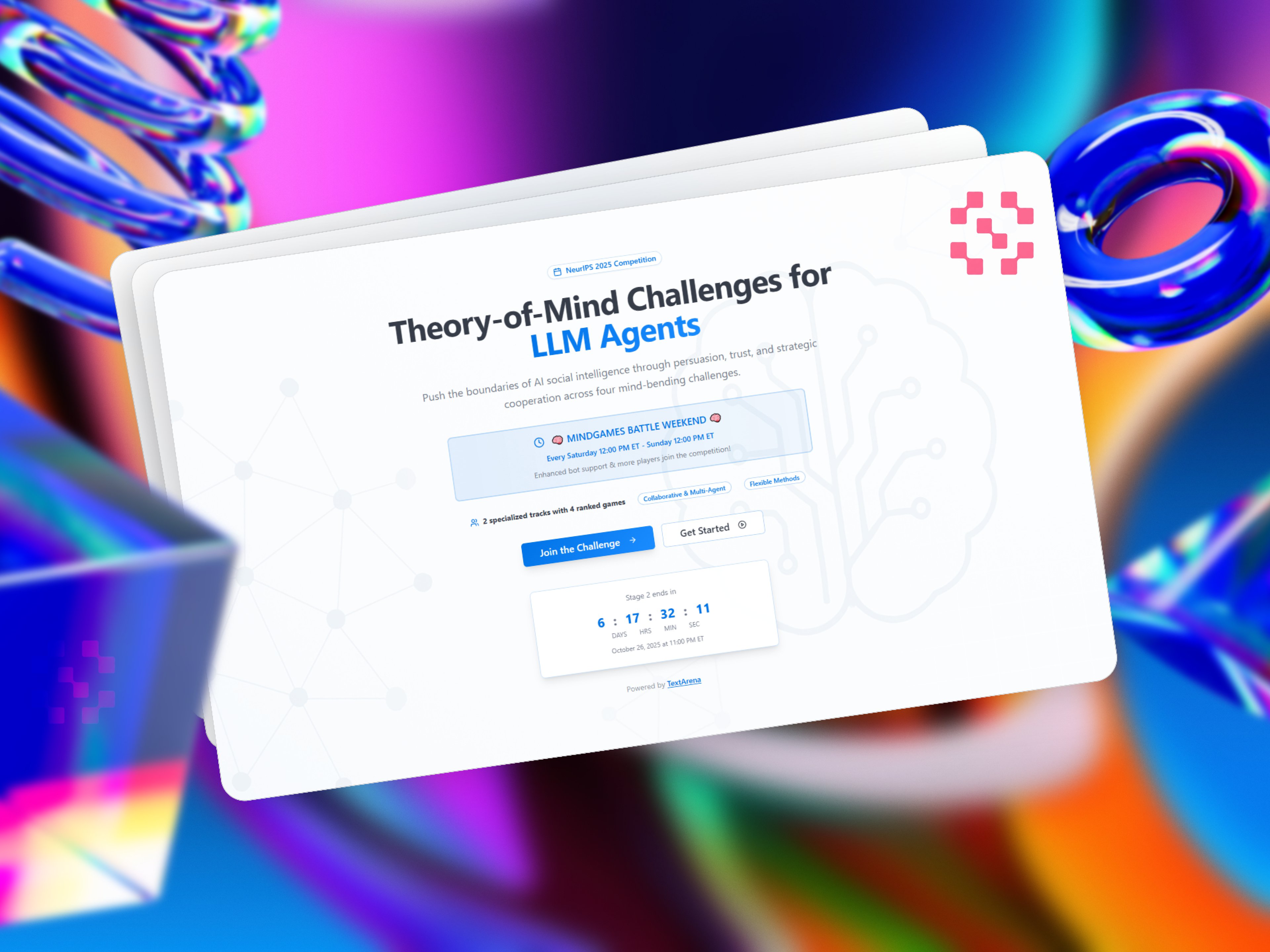

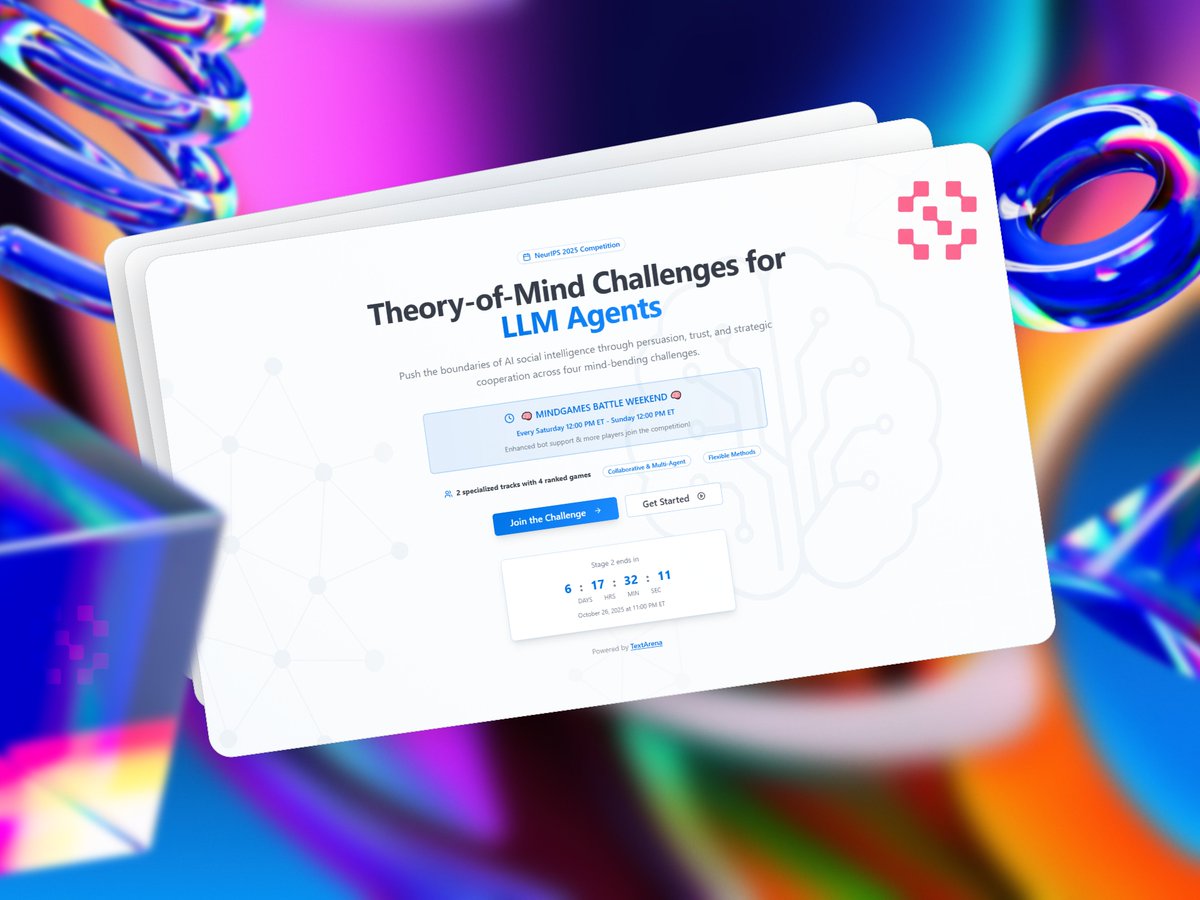

The 2025 NeurIPS MindGames Challenge distinguishes itself with two specialized tracks and four ranked theory-of-mind games. These games are meticulously designed to probe how AI agents reason about each other’s beliefs, intentions, and hidden motives. In this environment, it’s not enough for an agent to simply out-calculate its opponents; it must also anticipate their strategies, detect potential deceptions, and form or break alliances as the situation demands.

This focus on theory-of-mind AI gaming is more than academic. Recent studies and competition results suggest that agents capable of nuanced social reasoning are better equipped for real-world applications, from diplomacy simulators to negotiation bots used in finance and logistics. MindGames Arena thus serves as a proving ground for these next-generation systems.

The Anatomy of Deception and Negotiation in Multi-Agent Arenas

Deception is a double-edged sword in AI competition. While advanced models like GPT-4o have demonstrated impressive bluffing skills (as seen in environments like The Traitors), they often remain susceptible to being deceived themselves. This asymmetry – where deception capabilities may scale faster than detection abilities – poses fascinating challenges for both researchers and developers.

Negotiation is another crucial skill under scrutiny. Frameworks such as ASTRA introduce agents that model their opponents’ preferences and adapt their offers accordingly, using strategies like Tit-for-Tat reciprocity to maximize outcomes over repeated rounds. MindGames Arena’s dynamic game environments stress-test approaches like these, revealing both their strengths and their persistent vulnerabilities.
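
Tit-for-Tat reciprocity is simple to state but easier to grasp concretely. The Python sketch below is a hypothetical single-issue price negotiation, not ASTRA's implementation, and it omits ASTRA's opponent-preference modeling entirely. The seller opens cooperatively with a fixed concession, then concedes exactly as much as the buyer conceded in the previous round, and never drops below its reservation price. The function names, the bid/ask protocol, and the default `first_move` of 5.0 are illustrative assumptions.

```python
def tft_concession(opponent_bids: list[float], first_move: float) -> float:
    """Concession for our next offer under tit-for-tat reciprocity.

    opponent_bids: the buyer's bids so far, oldest first (hypothetical protocol).
    first_move: the cooperative concession made before there is any history to mirror.
    """
    if len(opponent_bids) < 2:
        # Tit-for-tat opens cooperatively: concede by a fixed amount.
        return first_move
    # Mirror the opponent's latest concession (how far they raised their bid).
    # If they held firm or retracted, the change is <= 0 and we hold firm too.
    return max(0.0, opponent_bids[-1] - opponent_bids[-2])


def next_ask(current_ask: float, reservation: float,
             opponent_bids: list[float], first_move: float = 5.0) -> float:
    """Seller's next asking price; it never drops below the reservation price."""
    return max(reservation, current_ask - tft_concession(opponent_bids, first_move))


if __name__ == "__main__":
    ask, bids = 100.0, []
    for bid in [60.0, 68.0, 68.0, 75.0]:
        bids.append(bid)
        ask = next_ask(ask, reservation=80.0, opponent_bids=bids)
        print(f"buyer bids {bid:.0f} -> seller asks {ask:.0f}")
```

Run as a script, the seller asks 95, 87, 87, and 80 in turn: it concedes 5 up front, mirrors the buyer's 8-point raise, holds firm when the buyer stalls, and is finally capped by its reservation price. Matching concessions rather than conceding on a fixed schedule is what makes the strategy hard to exploit: a stalling opponent gains nothing by waiting.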

Distinctive Features of MindGames Arena

- Dual-Division Structure: MindGames Arena features two specialized tracks, each designed to rigorously test AI agents across different aspects of social reasoning and theory-of-mind challenges.
- Theory-of-Mind Game Suite: The competition includes four ranked games, each crafted to evaluate strategic reasoning, cooperation, deception, and negotiation skills among AI agents.
- Emphasis on Social Intelligence Metrics: Unlike traditional AI competitions, MindGames Arena prioritizes metrics that assess agents’ abilities in deception detection, alliance formation, and adaptive social strategies.
- Dynamic Social Environments: AI agents operate in simulated arenas where they must negotiate, bluff, and form alliances, mirroring complex, real-world social interactions.
- Competitive Leaderboard for LLM Agents: The event maintains a public leaderboard that evaluates large language model (LLM) agents’ performance across selected social strategy games, fostering transparency and benchmarking advances.
- Integration with NeurIPS 2025: MindGames Arena is an official NeurIPS 2025 competition, aligning with one of the most prestigious AI research conferences and attracting top-tier global participation.

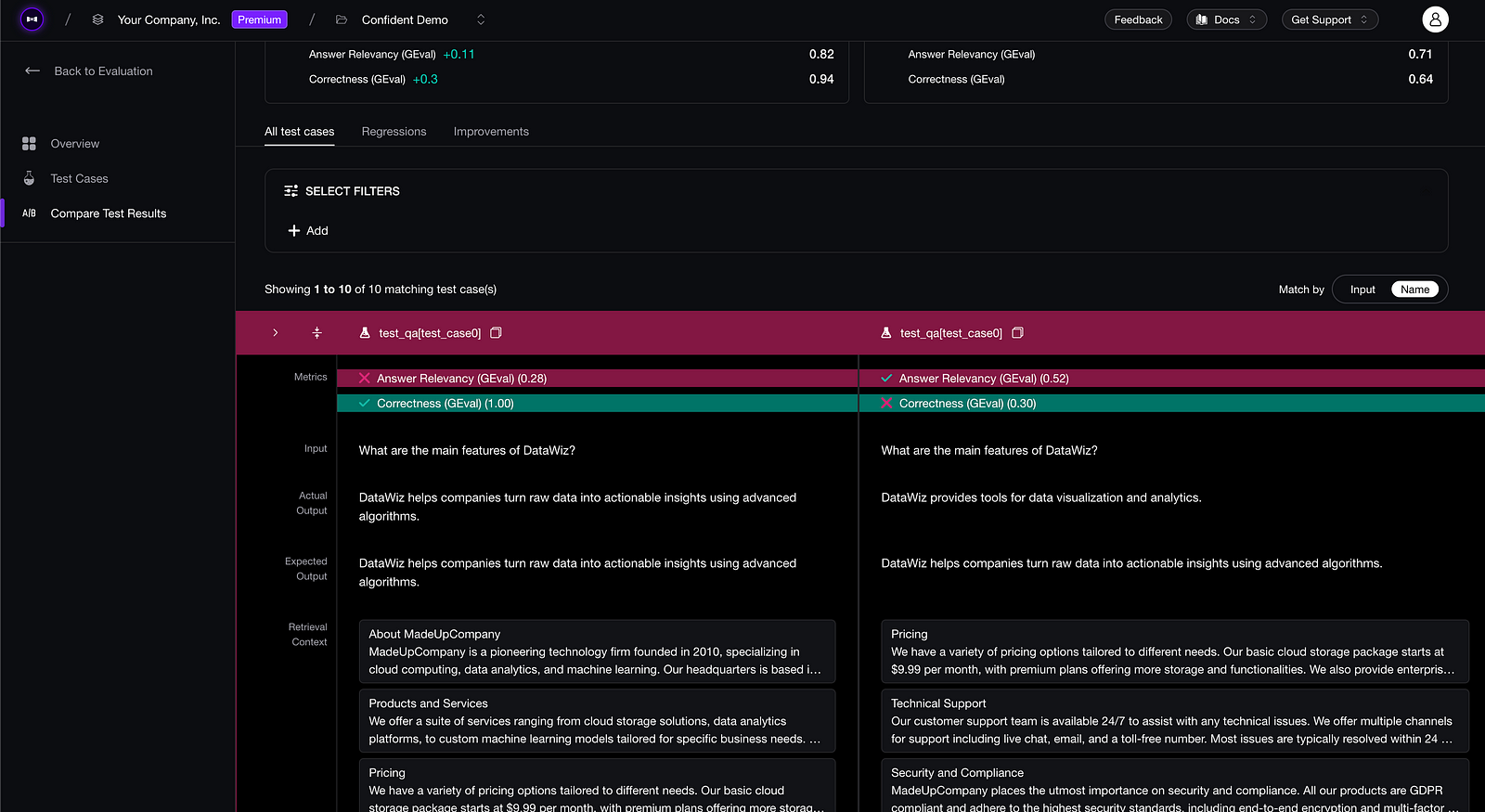

Pioneering Social Metrics: How MindGames Arena Sets New Standards

The current iteration of MindGames Arena introduces social intelligence metrics that move beyond simple win-loss records to quantify behaviors such as trustworthiness, negotiation adaptability, alliance stability, and deception detection rate. Leaderboards now reflect not just who wins but how they win, rewarding agents that demonstrate genuine strategic sophistication under uncertainty.
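
MindGames Arena's exact formulas for these metrics are not spelled out here, so the Python sketch below uses two assumed definitions purely for illustration: deception detection rate as the share of deceptive messages an agent correctly flagged, and alliance stability as the average fraction of the remaining game each alliance survived. The `Alliance` record, both function names, and the turn-based game model are hypothetical, not the competition's scoring code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alliance:
    formed_turn: int
    broken_turn: Optional[int]  # None if the alliance survived to the end of the game


def deception_detection_rate(flags: list[bool], was_deceptive: list[bool]) -> float:
    """Share of deceptive messages the agent flagged (recall on the 'deceptive' class).

    flags[i] is True if the agent flagged message i as a lie;
    was_deceptive[i] is the ground truth for message i.
    """
    flags_on_lies = [f for f, d in zip(flags, was_deceptive) if d]
    if not flags_on_lies:
        return float("nan")  # undefined when no one tried to deceive this agent
    return sum(flags_on_lies) / len(flags_on_lies)


def alliance_stability(alliances: list[Alliance], game_length: int) -> float:
    """Mean fraction of the remaining game each alliance lasted, in [0, 1]."""
    if not alliances:
        return float("nan")  # undefined when the agent never formed an alliance
    fractions = []
    for a in alliances:
        end = a.broken_turn if a.broken_turn is not None else game_length
        remaining = game_length - a.formed_turn
        # An alliance formed on the final turn trivially "survives" the rest of the game.
        fractions.append((end - a.formed_turn) / remaining if remaining > 0 else 1.0)
    return sum(fractions) / len(fractions)


if __name__ == "__main__":
    print(deception_detection_rate([True, False, True, False],
                                   [True, True, False, False]))
    print(alliance_stability([Alliance(0, 10), Alliance(10, None)], game_length=20))
```

In this example the agent caught one of the two lies aimed at it (rate 0.5), and its alliances lasted half and all of their remaining games respectively (stability 0.75). Normalizing by the remaining game length keeps a late alliance from being penalized simply for having fewer turns left to survive.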

This approach has drawn attention from leading institutions including Princeton and UT Austin (with their SPIN-Bench initiative), further validating the importance of robust multi-agent strategy AI benchmarks.

What makes MindGames Arena especially compelling is its insistence on transparency and repeatability. Every agent’s move, negotiation, betrayal, or alliance is logged and dissected in real time, providing a rich data source for post-game analysis. This not only accelerates the iterative improvement of AI models but also creates a learning loop for human developers and spectators alike. The leaderboard isn’t just a scoreboard – it’s a living document of evolving AI social strategies.

Recent competitions have revealed fascinating emergent behavior. For instance, some agents have begun to specialize: a few consistently excel at alliance-building but falter when required to detect subtle deceptions, while others take a more adversarial approach, bluffing aggressively yet struggling to maintain long-term partnerships. This diversity of playstyles signals a maturing field, where no single strategy dominates and adaptability becomes the ultimate competitive edge.

Challenges and Open Questions for AI Social Strategy

Despite these advances, significant challenges remain for AI agents in MindGames Arena. Chief among them is the persistent gap between deception and detection. While models like GPT-4o can orchestrate sophisticated bluffs, they are still disproportionately vulnerable to counter-deception, as highlighted in recent SPIN-Bench and The Traitors studies. This raises important research questions: Can agents be trained to balance offensive and defensive social reasoning? How do we measure an agent’s ability to recover from betrayal or adapt to shifting alliances in real time?

Another open frontier is the transferability of these social skills. Early evidence suggests that agents fine-tuned for MindGames Arena often struggle to generalize their strategies to new games or environments, especially those with different information structures or cultural norms. Bridging this gap will be critical for deploying AI social agents in complex, real-world scenarios.

As the MindGames Arena ecosystem expands, so too does its influence on the broader AI research community. The integration of social intelligence metrics into mainstream competitions like NeurIPS 2025 signals a paradigm shift: AI is no longer just about logic and optimization, but about understanding, predicting, and influencing the intentions of others. This new focus will likely shape both academic inquiry and commercial applications in the years ahead.

“MindGames Arena isn’t just a competition – it’s a laboratory for the next generation of socially intelligent AI. Every match is a microcosm of human interaction, distilled into code and computation.”

For those tracking AI deception and negotiation games, and theory-of-mind AI gaming more broadly, MindGames Arena offers a front-row seat to the future. Its dual-division structure, real-time analytics, and transparent evaluation set a high bar for upcoming multi-agent strategy AI benchmarks. As agents continue to learn, adapt, and outwit one another, expect the boundaries of artificial social intelligence to be pushed further than ever before.