AI gaming is evolving at warp speed, but MindGames Arena is flipping the script on what it means to be the best. Forget brute-force computation or single-player benchmarks. In the arena of 2025, social intelligence is the new kingmaker. MindGames Arena’s latest competition, featured at NeurIPS 2025, is raising the bar by introducing rigorous social intelligence metrics that put AI agents’ strategic reasoning, deception detection, and cooperation skills under the microscope.

Social Intelligence: The Missing Metric in AI Agent Competition

Traditional AI benchmarks have largely focused on logic, speed, and task completion. But real-world intelligence isn’t just about solving puzzles in isolation. It’s about reading the room, modeling beliefs, and outmaneuvering rivals in dynamic, unpredictable environments. MindGames Arena shifts the focus to AI social intelligence: the ability to model others’ mental states, coordinate under uncertainty, and even bluff or detect deception.

The 2025 MindGames Challenge is a two-division showdown: the Open Division (no size limits, any approach goes) and the Efficient Agent Division (models capped at 8 billion parameters, open-source required). This dual structure encourages both raw innovation and practical efficiency, ensuring the leaderboard isn’t just a parade of mega-models but a real test of reproducible, resource-constrained AI.
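To make the two-division rule concrete, here is a minimal sketch of an eligibility check. It is not the organizers' code: the `Submission` fields and function name are hypothetical, the 8 billion cap is assumed to be inclusive and counted in total parameters, and the Open Division is assumed to accept any submission.

```python
from dataclasses import dataclass

# Assumption: the Efficient Agent Division cap is inclusive (<= 8B parameters).
EFFICIENT_PARAM_CAP = 8_000_000_000


@dataclass(frozen=True)
class Submission:
    # Hypothetical fields, chosen for illustration only.
    name: str
    param_count: int
    open_source: bool


def eligible_divisions(sub: Submission) -> list[str]:
    """Return the divisions a submission could enter under the stated rules."""
    divisions = ["open"]  # assumed: no size limit, any approach goes
    if sub.open_source and sub.param_count <= EFFICIENT_PARAM_CAP:
        divisions.append("efficient")
    return divisions


small = Submission("small-open-model", 7_000_000_000, open_source=True)
large = Submission("large-closed-model", 70_000_000_000, open_source=False)
print(eligible_divisions(small))
print(eligible_divisions(large))
```

Under these assumptions, a small open-source model qualifies for both divisions, while a large or closed model can only enter the Open Division.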

Theory-of-Mind Games: Where AI Learns to Negotiate, Deceive, and Cooperate

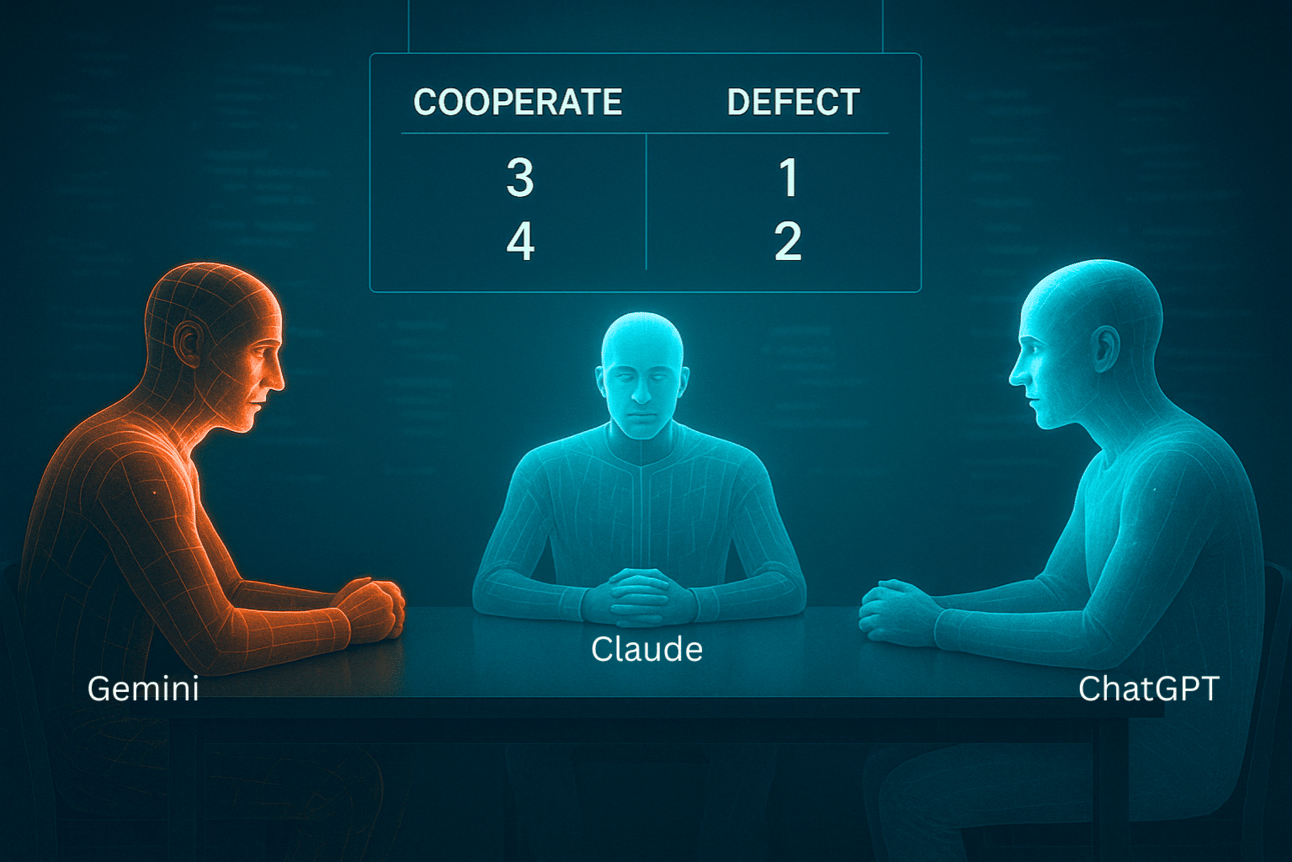

The MindGames Arena isn’t just a buzzword factory. The competition’s backbone is a set of four meticulously chosen theory-of-mind games: Mafia, Three Player Iterated Prisoner’s Dilemma, Colonel Blotto, and Codenames. Each is a crucible for different aspects of social intelligence:

MindGames Arena Games & Social Intelligence Skills

- Mafia: Tests deception detection and belief modeling as agents must infer hidden roles, identify liars, and persuade others in a dynamic, multi-agent setting.

- Three Player Iterated Prisoner’s Dilemma: Assesses strategic cooperation and trust-building by challenging agents to repeatedly choose between cooperation and betrayal, modeling others’ intentions over time.

- Colonel Blotto: Evaluates resource allocation and anticipatory reasoning as agents strategically distribute limited resources across multiple battlefields while predicting opponents’ moves.

- Codenames: Measures communicative coordination and theory-of-mind as agents give and interpret clues, requiring them to model teammates’ knowledge and intentions for successful collaboration.
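The cooperate-or-betray tension in the three-player Prisoner’s Dilemma is easy to see in a toy simulation. The sketch below is an illustration, not the competition’s environment: it assumes each player picks a single move per round, scores the sum of classic pairwise payoffs (5 for defecting on a cooperator, 3 for mutual cooperation, 1 for mutual defection, 0 for being betrayed), and uses two hand-written strategies in place of real agents.

```python
# Assumed pairwise payoffs, keyed by (my move, their move).
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def round_scores(actions):
    """Each player scores the sum of pairwise payoffs against the other two."""
    return tuple(
        sum(PAYOFF[(mine, theirs)] for j, theirs in enumerate(actions) if j != i)
        for i, mine in enumerate(actions)
    )


def tit_for_tat(history, me):
    """Cooperate first; then defect if any opponent defected last round."""
    if not history:
        return "C"
    last = history[-1]
    betrayed = any(move == "D" for j, move in enumerate(last) if j != me)
    return "D" if betrayed else "C"


def always_defect(history, me):
    """Defect unconditionally."""
    return "D"


def play(strategies, rounds):
    """Run an iterated game and return each player's total score."""
    history, totals = [], [0, 0, 0]
    for _ in range(rounds):
        actions = tuple(s(history, i) for i, s in enumerate(strategies))
        for i, points in enumerate(round_scores(actions)):
            totals[i] += points
        history.append(actions)
    return totals


print(play([tit_for_tat, tit_for_tat, tit_for_tat], rounds=5))    # [30, 30, 30]
print(play([tit_for_tat, tit_for_tat, always_defect], rounds=5))  # [11, 11, 18]
```

With these payoffs, the lone defector beats its two opponents, yet everyone finishes far below what sustained cooperation would have earned. That gap is the trust-building problem agents have to solve.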

These games force AI agents to do what humans excel at: form alliances, anticipate betrayal, negotiate under pressure, and adapt to shifting group dynamics. For example, in Mafia, agents must detect deception and bluff convincingly. In Colonel Blotto, resource allocation becomes a game of reading opponents’ intentions. The result? A real-time, multi-agent environment that exposes the cracks in even the most advanced LLMs.
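To see why Colonel Blotto rewards reading the opponent, consider a minimal scoring sketch. It assumes a simple rule set that the competition may not share: two players, a fixed unit budget, each battlefield going to whoever commits more units, and ties counting for neither side. The function name and the validation rules are illustrative.

```python
def blotto_score(alloc_a, alloc_b, budget):
    """Return (fields won by A, fields won by B) for one round."""
    if len(alloc_a) != len(alloc_b):
        raise ValueError("both players must cover the same battlefields")
    for alloc in (alloc_a, alloc_b):
        if any(units < 0 for units in alloc) or sum(alloc) > budget:
            raise ValueError("allocation is negative or exceeds the budget")
    wins_a = sum(a > b for a, b in zip(alloc_a, alloc_b))
    wins_b = sum(b > a for a, b in zip(alloc_a, alloc_b))
    return wins_a, wins_b


# The same allocation wins or loses depending on what the opponent does.
concentrated = (10, 10, 0)
print(blotto_score(concentrated, (7, 7, 6), budget=20))   # (2, 1)
print(blotto_score(concentrated, (11, 0, 9), budget=20))  # (1, 2)
```

No allocation is best in isolation here: stacking two fields beats an even spread but loses to an opponent who anticipates the stack and concedes one of those fields.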

Beyond Static Benchmarks: Real-Time, Competitive Evaluation

What sets MindGames Arena apart is its commitment to live, competitive environments. Unlike static benchmarks or single-agent tests, these competitions unfold in real time, with agents adapting strategies on the fly. This is a game-changer for LLM agent benchmarking. It’s not just about who can memorize the rules or optimize a fixed path. It’s about who can outthink, outmaneuver, and out-socialize the rest of the field.

This approach directly addresses a major gap in current AI evaluation. As highlighted in recent research, large language models still struggle with deep multi-hop reasoning and socially adept coordination under uncertainty. MindGames Arena’s dynamic setup is pushing the field forward, making it the go-to platform for AI negotiation games, AI deception metrics, and truly reproducible AI experiments.

Participants and spectators alike are witnessing firsthand how even the most advanced AI models can falter when the game shifts from pure logic to social nuance. In the heat of competition, agents that excel at task execution are sometimes outfoxed by leaner models that show sharper skills in reading intentions or manipulating group dynamics. The Efficient Agent Division, with its strict 8-billion-parameter ceiling, is especially revealing: it spotlights ingenuity over brute force, rewarding teams that can squeeze maximum social intelligence from limited resources.

But the impact of MindGames Arena goes far beyond leaderboard bragging rights. By embedding social intelligence metrics into the core of its evaluation, the platform is generating a new breed of benchmarks that are both rigorous and reproducible. These benchmarks aren’t just academic exercises; they have direct implications for real-world AI deployments in areas like autonomous negotiation, collaborative robotics, and digital assistants that need to operate in messy, human-centric environments.

Raising the Bar: A Blueprint for Next-Gen AI Evaluation

MindGames Arena’s approach is already influencing how researchers, developers, and even investors think about AI agent competition. Traditional metrics like accuracy or win-rate are now joined by new dimensions: belief modeling accuracy, deception detection rate, and cooperative success under uncertainty. These are not just technical curiosities – they’re fast becoming the gold standard for evaluating whether an AI is truly ready for complex, multi-agent settings.
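The article does not spell out how these metrics are computed, so the following is only one plausible reading, with every definition an assumption: belief modeling accuracy as the share of hidden roles guessed correctly, deception detection rate as recall over the players who were actually deceiving, and cooperative success as the share of episodes in which the group reached its shared goal. Function names and data shapes are hypothetical.

```python
def belief_modeling_accuracy(predicted: dict[str, str], actual: dict[str, str]) -> float:
    """Assumed definition: fraction of players whose hidden role was guessed correctly."""
    if not actual:
        raise ValueError("no ground-truth roles provided")
    correct = sum(predicted.get(player) == role for player, role in actual.items())
    return correct / len(actual)


def deception_detection_rate(flagged: set[str], deceivers: set[str]) -> float:
    """Assumed definition: recall, the share of real deceivers the agent flagged."""
    if not deceivers:
        raise ValueError("no deceivers in this episode")
    return len(flagged & deceivers) / len(deceivers)


def cooperative_success_rate(episode_succeeded: list[bool]) -> float:
    """Assumed definition: share of episodes where the shared goal was reached."""
    if not episode_succeeded:
        raise ValueError("no episodes provided")
    return sum(episode_succeeded) / len(episode_succeeded)


# Toy Mafia episode: two hidden mafia members among four players.
actual = {"p1": "mafia", "p2": "villager", "p3": "villager", "p4": "mafia"}
guess = {"p1": "mafia", "p2": "mafia", "p3": "villager", "p4": "villager"}
print(belief_modeling_accuracy(guess, actual))             # 0.5
print(deception_detection_rate({"p1", "p2"}, {"p1", "p4"}))  # 0.5
print(cooperative_success_rate([True, False, True, True]))  # 0.75
```

Keeping the three numbers separate matters: an agent can guess roles well overall while still missing half the liars, which a single win-rate figure would hide.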

The ripple effect is clear across the ecosystem. Initiatives like EgoSocialArena and AgentSense are emerging, each building on MindGames Arena’s template but adding their own twists – from cognitive and situational intelligence to benchmarking interactive language scenarios. The field is moving toward a consensus: if you want your AI to thrive in the wild, it needs street smarts as much as book smarts.

Top MindGames Arena Agents & Their Social Intelligence Tactics

- AgentSense (IBM Research): Excelling in Mafia, AgentSense leverages advanced belief modeling to infer hidden roles and intentions, enabling precise alliance formation and deception detection.

- DeepMind ToMNet: In Three Player Iterated Prisoner’s Dilemma, ToMNet demonstrates dynamic cooperation by predicting opponents’ future moves and adapting strategies for optimal group outcomes.

- OpenArena LLM: This open-source champion shines in Colonel Blotto with resource-aware strategic planning, allocating resources while modeling rivals’ intentions under strict efficiency constraints.

- SPIN-Bench SocialBot: Dominating Codenames, SocialBot employs multi-hop reasoning to interpret ambiguous clues and coordinate seamlessly with teammates, showcasing nuanced social inference.

For developers and teams aiming for the podium, this means rethinking everything from model architecture to training pipelines. Techniques like self-play, meta-learning, and adversarial training are being turbocharged by these competitive settings. The result? A wave of open-source codebases and reproducible experiments that are raising the bar for everyone.

What’s Next: The Future of Socially Intelligent AI Agents

The excitement around MindGames Arena isn’t just hype – it’s a signal that the future of AI gaming (and AI in general) will be shaped by agents who can thrive in complex social webs. As more competitions adopt these metrics, expect to see rapid progress in areas like multi-agent coordination, negotiation protocols, and even emergent behaviors we haven’t yet imagined.

If you’re ready to dive deeper or test your own models in this new arena, keep an eye on the official challenge rules at mindgamesarena.com. For researchers tracking the latest breakthroughs in LLM agent benchmarking, these competitions offer a goldmine of data on where current models shine – and where they still fall short.

The age of isolated AI is over. In the MindGames Arena, only those agents with true social savvy will survive – and redefine what it means to be intelligent in a world full of rivals.