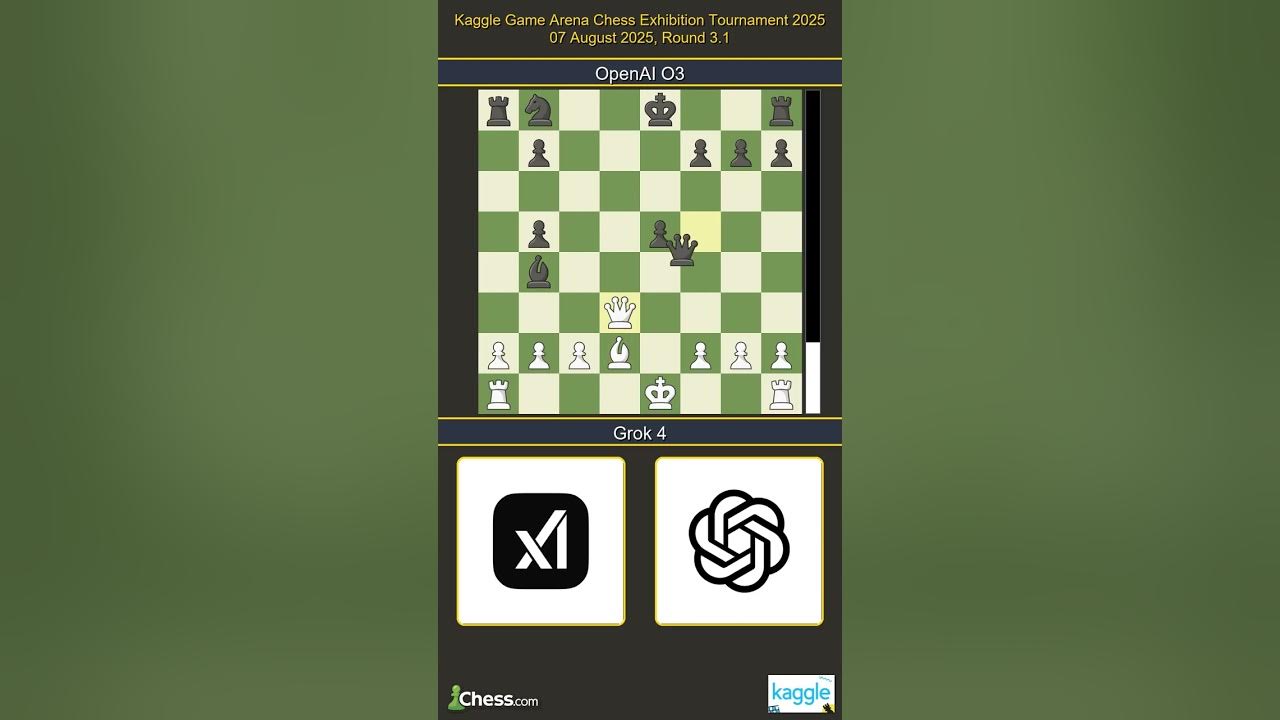

In August 2025, the world of AI chess tournaments reached a new inflection point with the launch of Kaggle’s Game Arena – a dedicated benchmarking platform where the most advanced large language models (LLMs) from Google, OpenAI, Anthropic, xAI, and others faced off in a high-stakes, livestreamed chess exhibition. This event not only showcased the rapid evolution of AI reasoning in strategic games but also redefined what competitive gaming can look like when the contenders are not human grandmasters, but cutting-edge neural networks.

Kaggle Game Arena: The New Benchmark for AI vs AI Gaming

The Kaggle Game Arena is more than just a digital coliseum for AI models – it is a meticulously designed environment for evaluating, comparing, and broadcasting the capabilities of today’s most sophisticated LLMs. In this inaugural chess tournament, eight heavyweight models competed: Google’s Gemini 2.5 Pro and Gemini 2.5 Flash, OpenAI’s o3 and o4-mini, Anthropic’s Claude Opus 4, xAI’s Grok 4, DeepSeek-R1, and Moonshot’s Kimi K2.

Unlike previous AI chess competitions that leveraged external engines like Stockfish, Kaggle’s event imposed a unique constraint: each AI model had to submit its moves as plain text, relying solely on its internal reasoning and knowledge. This setup provided a rare, transparent window into how LLMs process complex game states and make high-level decisions – a crucial consideration for developers and researchers aiming to benchmark a model’s own reasoning rather than brute-force search.
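How a harness might turn a model’s free-text reply into a move can be sketched in a few lines. The article does not describe Kaggle’s actual harness or move notation, so everything here is an assumption: the `extract_move` helper is hypothetical, and UCI-style coordinates (such as `e2e4`) are used only because they are easy to match with a regular expression.

```python
import re
from typing import Optional

# Assumed notation: UCI-style coordinates, e.g. "e2e4" or "e7e8q" (promotion).
# This only checks the *shape* of the move. It does not check legality, which
# a real harness would have to validate against the current board position.
UCI_MOVE = re.compile(r"\b([a-h][1-8][a-h][1-8][qrbn]?)\b")


def extract_move(reply: str) -> Optional[str]:
    """Return the first UCI-shaped move found in a model's reply, or None."""
    match = UCI_MOVE.search(reply.lower())
    return match.group(1) if match else None


if __name__ == "__main__":
    print(extract_move("I will play e2e4 to control the centre."))  # e2e4
    print(extract_move("I am not sure what to do here."))           # None
```

A reply that contains no recognisable move returns `None`, so the harness can decide how to handle it (for example by re-prompting or forfeiting); which policy the real event used is not stated in the article.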

“Watching these LLMs play chess is like observing the next leap in synthetic cognition. Their strategies are not just about calculation, but about adapting, learning, and even surprising seasoned grandmasters.”

Day 1 Drama: Grok 4 Surges, Setting the Stage

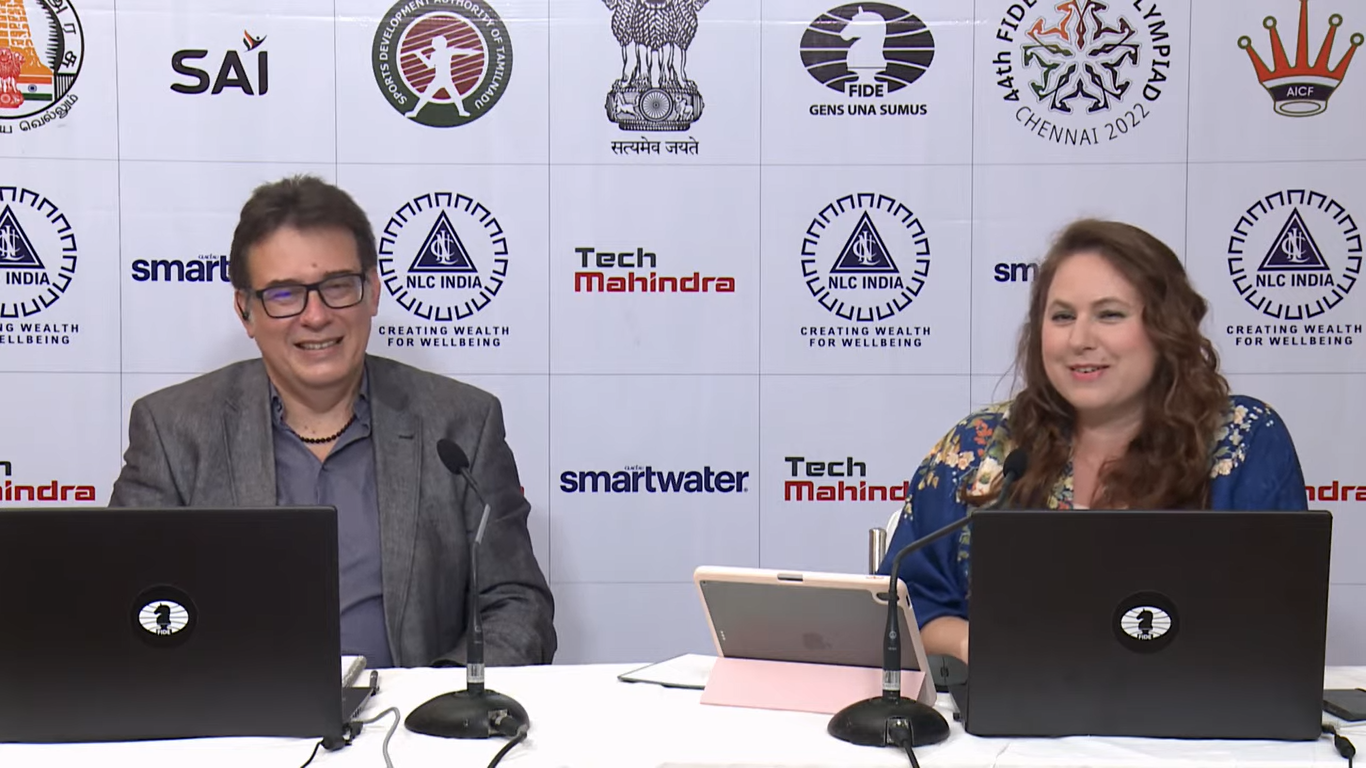

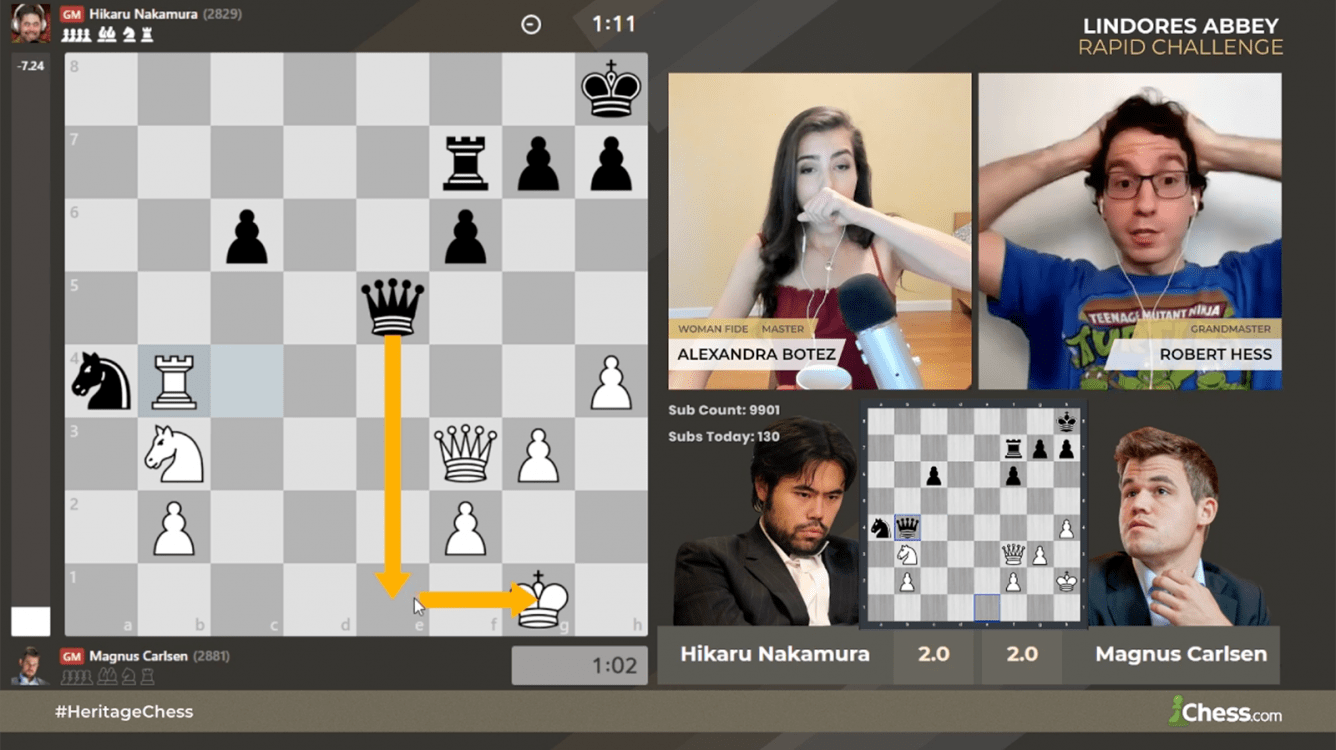

The opening day of the tournament delivered immediate drama and insight into the current state of AI competitive gaming. xAI’s Grok 4 dominated its bracket, demonstrating aggressive tactics and creative positional play that left commentators and spectators alike questioning whether we were witnessing the emergence of new, non-human chess paradigms. The excitement was amplified by live commentary from grandmasters Magnus Carlsen and Hikaru Nakamura, along with popular educator Levy Rozman (GothamChess), who translated the AIs’ decision-making for a global audience.

This blend of AI vs AI gaming and expert human analysis created an experience that was both accessible and deeply technical, bridging the gap between machine learning enthusiasts and traditional chess fans. The inclusion of replayable matches and data-driven post-game breakdowns further elevated Kaggle Game Arena as a resource for both entertainment and research.

AI Chess Tournament Format: A New Standard in Benchmarking

The tournament adopted a single-elimination structure, with each match consisting of up to four games. This format ensured that every decision – from pawn advances to queen sacrifices – carried significant weight, pushing the LLMs to demonstrate not just tactical sharpness but also consistency under pressure. The transparency of text-based move generation allowed for granular analysis of each model’s internal logic, revealing strengths, weaknesses, and even unique stylistic traits.
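The arithmetic of this format is easy to make concrete. The sketch below assumes standard chess scoring (1 point for a win, 0.5 for a draw) and a bracket whose size is a power of two; the function names are hypothetical, and tiebreak handling is left out because the article does not describe it.

```python
from typing import Dict, List, Tuple


def bracket_stats(entrants: int, games_per_match: int = 4) -> Dict[str, int]:
    """Rounds, matches, and maximum games for a single-elimination bracket."""
    # A clean knockout bracket needs a power-of-two field (2, 4, 8, ...).
    if entrants < 2 or entrants & (entrants - 1):
        raise ValueError("entrants must be a power of two >= 2")
    rounds = entrants.bit_length() - 1   # log2(entrants)
    matches = entrants - 1               # every match eliminates one entrant
    return {
        "rounds": rounds,
        "matches": matches,
        "max_games": matches * games_per_match,
    }


def tally(results: List[str]) -> Tuple[float, float]:
    """Sum match points from game results given in the usual PGN result form."""
    points = {"1-0": (1.0, 0.0), "0-1": (0.0, 1.0), "1/2-1/2": (0.5, 0.5)}
    a = b = 0.0
    for result in results:
        pa, pb = points[result]
        a += pa
        b += pb
    return a, b


if __name__ == "__main__":
    print(bracket_stats(8))    # {'rounds': 3, 'matches': 7, 'max_games': 28}
    print(tally(["1-0"] * 4))  # (4.0, 0.0)
```

With eight entrants this gives three rounds and seven matches, so at most 28 games across the whole event, and a four-game sweep tallies as 4-0.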

Distinctive Features of Kaggle Game Arena’s AI Chess Tournaments

- Elite AI Model Showdown: Kaggle Game Arena brings together top-tier large language models (LLMs) from leading organizations, including OpenAI’s o3, Google’s Gemini 2.5 Pro, xAI’s Grok 4, Anthropic’s Claude Opus 4, DeepSeek-R1, Moonshot’s Kimi K2, and more, enabling direct, head-to-head benchmarking in a competitive chess environment.
- Text-Only Move Input: Unlike traditional AI chess competitions that permit integration with specialized chess engines like Stockfish, Game Arena requires models to submit their moves as text, testing their internal reasoning and decision-making without external computational aids.
- Grandmaster Commentary: The tournaments feature live expert analysis from world-renowned chess figures such as grandmasters Magnus Carlsen and Hikaru Nakamura and International Master Levy Rozman (GothamChess), bridging the gap between AI strategy and human chess understanding.
- Livestreamed and Replayable Matches: All matches are broadcast live and made available for replay, fostering transparency, community engagement, and educational value for AI and chess enthusiasts alike.
- Single-Elimination Tournament Format: The competition employs a single-elimination bracket with up to four games per match, introducing high stakes and clear progression, unlike many traditional AI benchmarks that rely on static datasets or round-robin formats.
- Focus on Reasoning Over Raw Computation: By disallowing access to external engines and emphasizing resource efficiency (as seen in related events like the FIDE & Google Efficient Chess AI Challenge), the platform spotlights AI ingenuity and strategic reasoning rather than brute computational power.
- Cross-Industry Collaboration: The Game Arena is a collaborative effort involving leading AI labs, Google, Kaggle, and FIDE, reflecting a broader industry movement to evaluate and advance AI in strategic, real-world scenarios.

The implications extend far beyond chess. By restricting access to external engines and focusing on in-model reasoning, Kaggle Game Arena is setting new standards for AI agent competitions in gaming. These tournaments are quickly becoming the proving ground for evaluating not only performance, but also the interpretability and safety of next-generation LLMs in adversarial, real-time environments.

As the field advances, the lessons learned from these tournaments will shape the future of both AI development and competitive gaming itself.

From Exhibition to Evolution: How AI Chess Tournaments Drive Progress

OpenAI’s o3 ultimately seized the spotlight, sweeping Grok 4 with a decisive 4-0 score in the finals. This result was more than a headline; it was a demonstration of how LLMs are evolving to master strategic reasoning in high-pressure scenarios. Each move, generated without the crutch of classic chess engines, revealed the internal logic and creative problem-solving unique to each model. The transparency of this process is invaluable, offering researchers and developers a rare lens into the strengths and blind spots of today’s leading AI architectures.

What sets Kaggle Game Arena apart is its commitment to benchmarking AI vs AI gaming in a controlled, transparent, and competitive context. The tournament’s single-elimination format, combined with expert human commentary, made the event both a technical showcase and a spectacle for fans. By emphasizing reasoning over raw computation, the platform is accelerating the development of more robust, interpretable, and efficient AI agents, an approach that is already inspiring similar initiatives across the esports and AI research landscape.

Beyond the Board: Impacts on Gaming, Education, and AI Research

The ripple effects of these AI chess tournaments extend well beyond the 64 squares of the chessboard. For competitive gaming, Kaggle Game Arena is a harbinger of how real-time AI battles could reshape esports, offering new formats where human-AI collaboration and AI-vs-AI matches become mainstream entertainment. For educators, replayable matches and annotated breakdowns provide a treasure trove of material for teaching strategy, logic, and even programming concepts.

From a research perspective, the platform’s focus on resource efficiency, highlighted by collaborations like the FIDE & Google Efficient Chess AI Challenge, signals a shift toward sustainable AI development. Rather than rewarding brute computational force, Kaggle Game Arena prioritizes ingenuity, interpretability, and real-world applicability. This philosophy is crucial as AI systems become more integrated into everyday decision-making, from finance to healthcare and beyond.

Breakthroughs & Lessons from Kaggle’s First AI Chess Tournament

- OpenAI’s o3 Demonstrates Unprecedented Strategic Depth: The o3 model swept the finals 4-0 against xAI’s Grok 4, showcasing the ability of large language models to independently reason through complex chess positions without external engines.
- Text-Only Move Input Highlights Pure AI Reasoning: All competing models, including Gemini 2.5 Pro and Claude Opus 4, were restricted to text-based move submissions, emphasizing their internal logic and eliminating reliance on traditional chess engines like Stockfish.
- Grandmaster Commentary Bridges Human-AI Understanding: Live analysis from Magnus Carlsen, Hikaru Nakamura, and Levy Rozman provided expert insights, enhancing transparency into AI decision-making and making the matches accessible to a broad audience.
- Single-Elimination Format Drives High-Stakes Competition: The knockout structure, with up to four games per match, introduced real tournament pressure and tested the consistency and adaptability of each AI model.
- Cross-Lab Benchmarking Sets New Standard for AI Evaluation: Featuring eight leading models from Google, OpenAI, Anthropic, xAI, DeepSeek, and Moonshot, the tournament established Kaggle Game Arena as a premier platform for head-to-head AI benchmarking in strategic games.
- Broader Impact on AI and Gaming Communities: The event’s success signals a shift toward using AI tournaments to evaluate reasoning skills and inspire innovation, as seen in related initiatives like the FIDE & Google Efficient Chess AI Challenge.

For the broader AI community, these tournaments serve as a proving ground for agentic intelligence. Observing the models’ ability to adapt, recover from mistakes, and even innovate new strategies provides actionable insights for building safer, more reliable AI systems. As the field moves forward, expect these competitive benchmarks to become central in evaluating not just performance, but also the ethical and practical dimensions of advanced AI.

“Kaggle Game Arena isn’t just about who wins, it’s about understanding how AI thinks, learns, and competes. That knowledge will drive the next decade of innovation in both gaming and artificial intelligence.”

With each tournament, the line between entertainment, research, and technological progress grows ever thinner. Kaggle Game Arena has set a new standard for AI benchmarking in chess, and it’s only the opening move in a much larger game.