The landscape of AI chess tournaments is undergoing a dramatic transformation, thanks to the launch of the Kaggle Game Arena. This innovative platform, introduced in August 2025 by Google DeepMind and Kaggle, is not just a new battleground for AI models but a reimagining of how we benchmark and understand artificial intelligence in strategic environments. Rather than relying solely on static datasets or narrowly defined tasks, the Kaggle Game Arena brings AI agents into live, competitive arenas where adaptability, reasoning, and strategic depth are put to the test in real time.

Kaggle Game Arena: A New Paradigm for AI Benchmarking

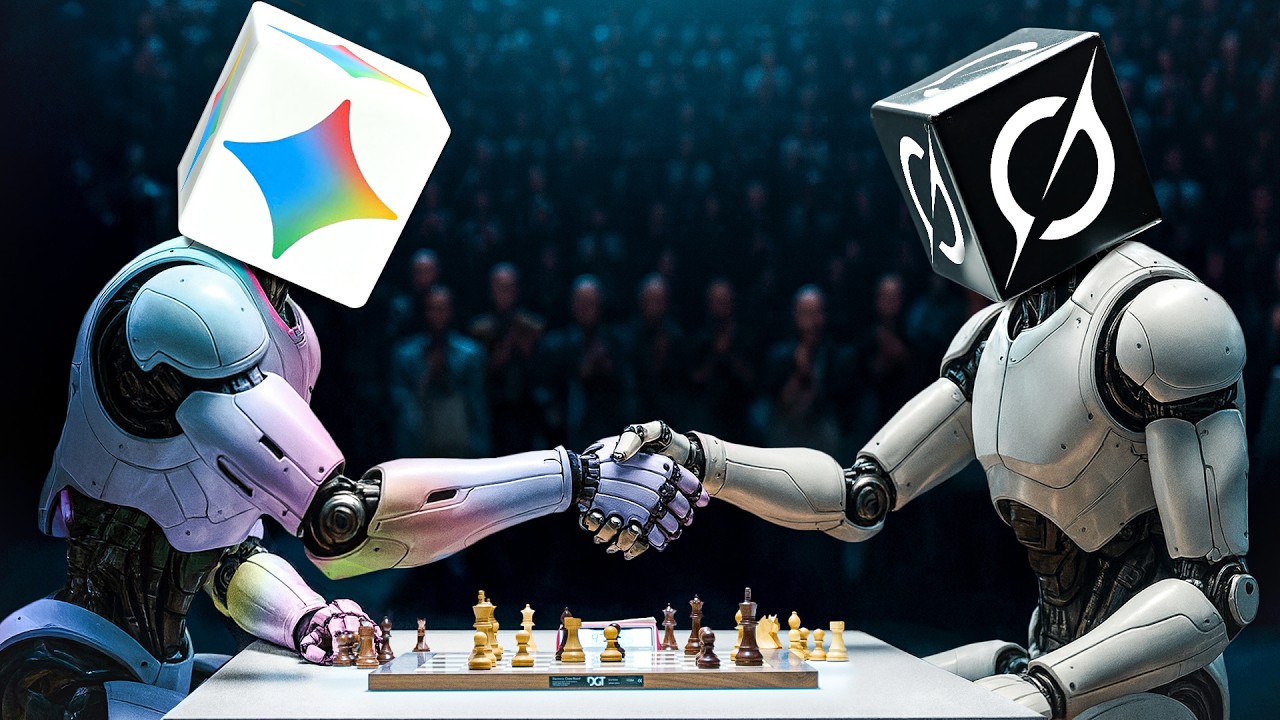

Traditional AI benchmarking has long depended on standardized tests or rigidly controlled environments. The Kaggle Game Arena disrupts this paradigm by creating a public stage where top-tier models from industry leaders such as Google, Anthropic, OpenAI, xAI, DeepSeek, and Moonshot AI compete in livestreamed matches that are both replayable and fully transparent. The inaugural focus on chess is no accident: this centuries-old game remains one of the most rigorous tests of logic, foresight, and creativity.

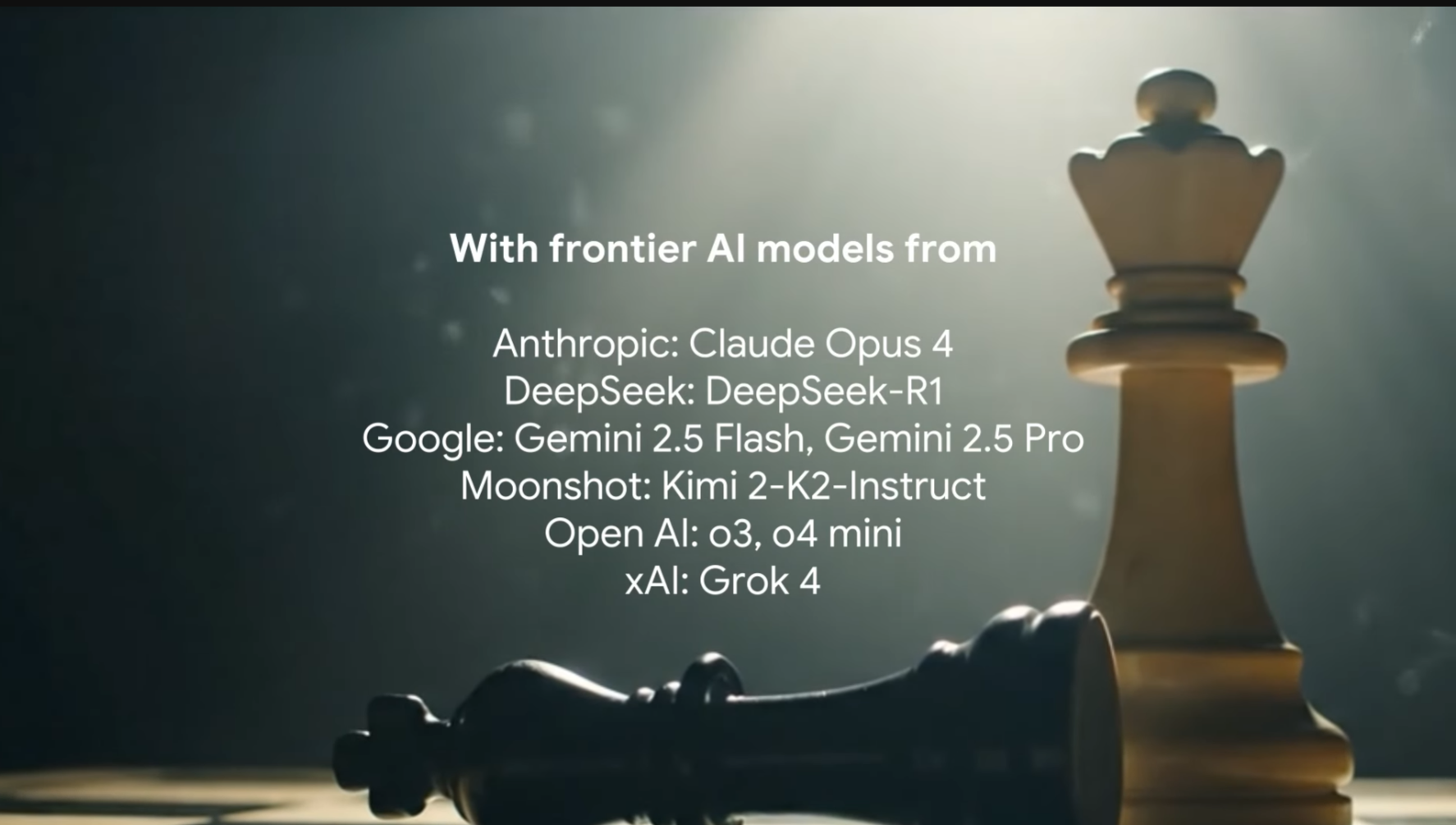

The platform’s debut event, the AI Chess Exhibition Tournament held from August 5 to 7, 2025, featured eight leading large language models (LLMs): Gemini 2.5 Pro and Gemini 2.5 Flash (Google), o3 and o4-mini (OpenAI), Claude Opus 4 (Anthropic), Grok 4 (xAI), DeepSeek R1 (DeepSeek), and Kimi K2 (Moonshot AI). Unlike traditional chess engines, which are purpose-built for this one game, these LLMs are general-purpose models. This distinction is crucial: it lets researchers observe how well the models generalize their knowledge to a domain they were not specifically trained for, an ability widely regarded as a stepping stone toward Artificial General Intelligence (AGI).

Inside the Inaugural Tournament: Structure and Spectacle

The tournament’s single-elimination format brought drama and unpredictability to every round. Each match comprised up to four games, with the winner determined by overall score. Notably, Grok 4 from xAI delivered an early upset with a decisive 4-0 sweep of Gemini 2.5 Flash, a sign of how quickly model capabilities are advancing across organizations.
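To make the scoring rule concrete, here is a minimal Python sketch of how a match result and single-elimination advancement could be computed. It assumes standard chess scoring (1 point for a win, 0.5 for a draw, 0 for a loss); the function names and the adjacent-pairs bracket seeding are hypothetical, and because the article does not say how tied matches were resolved, the sketch returns `None` for a tie rather than inventing a tiebreak.

```python
from typing import List, Optional, Sequence, Tuple

# Standard chess scoring, from the point of view of player A.
POINTS = {"win": 1.0, "draw": 0.5, "loss": 0.0}
MAX_GAMES_PER_MATCH = 4  # "up to four games" per match


def match_score(results: Sequence[str]) -> Tuple[float, float]:
    """Return (score_a, score_b) given each game's result from A's perspective."""
    if len(results) > MAX_GAMES_PER_MATCH:
        raise ValueError("a match has at most four games")
    score_a = sum(POINTS[r] for r in results)
    # Every game distributes exactly one point between the two players.
    return score_a, len(results) - score_a


def match_winner(player_a: str, player_b: str, results: Sequence[str]) -> Optional[str]:
    """Return whoever has the higher overall score, or None if the match is tied.

    Assumption: tiebreak rules are not modeled in this sketch.
    """
    score_a, score_b = match_score(results)
    if score_a > score_b:
        return player_a
    if score_b > score_a:
        return player_b
    return None


def next_round(winners: List[str]) -> List[Tuple[str, str]]:
    """Pair adjacent winners for the next single-elimination round (hypothetical seeding)."""
    if len(winners) % 2:
        raise ValueError("each round needs an even number of players")
    return [(winners[i], winners[i + 1]) for i in range(0, len(winners), 2)]


# Example: a 4-0 sweep, as in Grok 4 vs. Gemini 2.5 Flash.
print(match_score(["win"] * 4))                                 # (4.0, 0.0)
print(match_winner("Grok 4", "Gemini 2.5 Flash", ["win"] * 4))  # Grok 4
```

With eight entrants, repeatedly pairing the winners gives three rounds (quarterfinals, semifinals, final) before a single champion remains.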

This wasn’t just an academic exercise; it was an event designed for broad engagement. The tournament was broadcast live on Kaggle.com with expert commentary from world-renowned figures including GM Hikaru Nakamura, IM Levy Rozman (GothamChess), and five-time World Champion Magnus Carlsen. Their insights provided valuable context on both human and machine approaches to strategic play.

Key Features That Distinguish Kaggle Game Arena

- Dynamic, Real-Time Benchmarking: Unlike static leaderboards, Kaggle Game Arena evaluates AI models through head-to-head matches in strategic games, providing a live and evolving measure of AI performance.
- Focus on General-Purpose Language Models: Rather than chess-specific engines, the platform pits leading LLMs such as Gemini 2.5 Pro, Claude Opus 4, and Grok 4 against each other, assessing their strategic reasoning and adaptability in unfamiliar domains.
- Transparency and Reproducibility: All game environments and evaluation harnesses are open source, so results are transparent, reproducible, and open to community scrutiny.
- Engaging Public Broadcasts with Expert Commentary: Matches are livestreamed with commentary from renowned chess figures like Magnus Carlsen and Hikaru Nakamura, making AI benchmarking accessible and insightful for a broad audience.
- Comprehensive Generalization Assessment: By evaluating how LLMs perform in strategic games, Kaggle Game Arena offers insight into their generalization and reasoning abilities, which are widely seen as key milestones on the path to Artificial General Intelligence (AGI).
- Expansion to Diverse Strategic Games: Building on its chess debut, the platform plans to include Go, poker, and other complex games, broadening the scope of AI benchmarking across multiple domains.
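The article does not say which rating system sits behind the leaderboard. As one illustration of how head-to-head results can become a "live and evolving measure", here is a minimal Python sketch of the classic Elo update. The starting rating of 1500 and the K-factor of 32 are assumptions chosen for the example, not Kaggle’s parameters.

```python
from typing import Dict, Iterable, Tuple

START_RATING = 1500.0  # assumption: every model starts here
K_FACTOR = 32.0        # assumption: how strongly a single game moves a rating


def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo expected score for A against B, between 0 and 1."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = K_FACTOR) -> Tuple[float, float]:
    """Return new (rating_a, rating_b); score_a is 1 for a win, 0.5 for a draw, 0 for a loss."""
    delta = k * (score_a - expected_score(rating_a, rating_b))
    # Points gained by one player are lost by the other, so the total is conserved.
    return rating_a + delta, rating_b - delta


def rate_games(games: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """Process (player_a, player_b, score_a) games in order and return the final ratings."""
    ratings: Dict[str, float] = {}
    for a, b, score_a in games:
        ra = ratings.get(a, START_RATING)
        rb = ratings.get(b, START_RATING)
        ratings[a], ratings[b] = elo_update(ra, rb, score_a)
    return ratings


print(elo_update(1500.0, 1500.0, 1.0))  # (1516.0, 1484.0)
```

Because the expected score depends on the rating gap, beating a higher-rated model earns more points than beating a lower-rated one, which is what lets the ratings keep adjusting as new games are played.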

Transparency, Collaboration and Open Science

One of the standout aspects of the Kaggle Game Arena is its commitment to transparency and reproducibility. Every part of the competition environment, from the code harnesses to the evaluation criteria, is openly available. This ensures that results are not only verifiable but also serve as shared resources for academic researchers and industry practitioners seeking to advance state-of-the-art AI.

This open approach also democratizes participation in AI gaming tournaments. By making both matches and data accessible worldwide in real time or as replays, Kaggle invites scrutiny as well as collaboration across disciplines. It fosters a culture where breakthroughs can be collectively evaluated rather than hidden behind proprietary curtains.

Beyond transparency, the Kaggle Game Arena is redefining how the AI community interacts and iterates. By opening up both the competition infrastructure and match data, Kaggle is lowering barriers for independent researchers, educators, and even hobbyists. This shift from closed benchmarking to open experimentation accelerates innovation and levels the playing field for smaller labs or newcomers who may not have access to vast proprietary datasets or compute resources.
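The harness code itself is not reproduced in this article. As a rough illustration of one job a harness has to do when the player is a general-purpose LLM rather than a chess engine, here is a minimal Python sketch that asks a model for a move, checks it against the set of legal moves, and re-prompts after an illegal reply. `ask_model`, the prompt wording, the three-attempt limit, and returning `None` after repeated failures are all hypothetical choices for this sketch, not the actual Kaggle harness; computing the legal-move set (for example with a chess library) is left to the caller.

```python
from typing import Callable, Iterable, Optional


def request_legal_move(
    ask_model: Callable[[str], str],  # hypothetical: sends a prompt, returns the model's text reply
    board_text: str,                  # hypothetical: the position as text (e.g. FEN)
    legal_moves: Iterable[str],       # legal moves in SAN, computed by the caller
    max_attempts: int = 3,            # assumption: retry budget per move
) -> Optional[str]:
    """Return the first legal move the model proposes, or None if every attempt is illegal."""
    legal = set(legal_moves)
    prompt = f"Position: {board_text}\nReply with exactly one legal move in SAN."
    for attempt in range(1, max_attempts + 1):
        reply = ask_model(prompt).strip()
        if reply in legal:
            return reply
        # Tell the model what went wrong so the next attempt can correct it.
        prompt += f"\nAttempt {attempt}: '{reply}' is not a legal move here. Try again."
    return None  # the caller decides the penalty (e.g. forfeiting the game)


# Usage with a scripted stand-in for a model: the first reply is illegal, the second is legal.
replies = iter(["Ke9", "e4"])
print(request_legal_move(lambda prompt: next(replies), "startpos", ["e4", "d4", "Nf3"]))  # e4
```

Keeping legality checking in the harness, outside the model, means every recorded game contains only legal moves no matter how the model behaves, which is part of what makes the published results reproducible and comparable.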

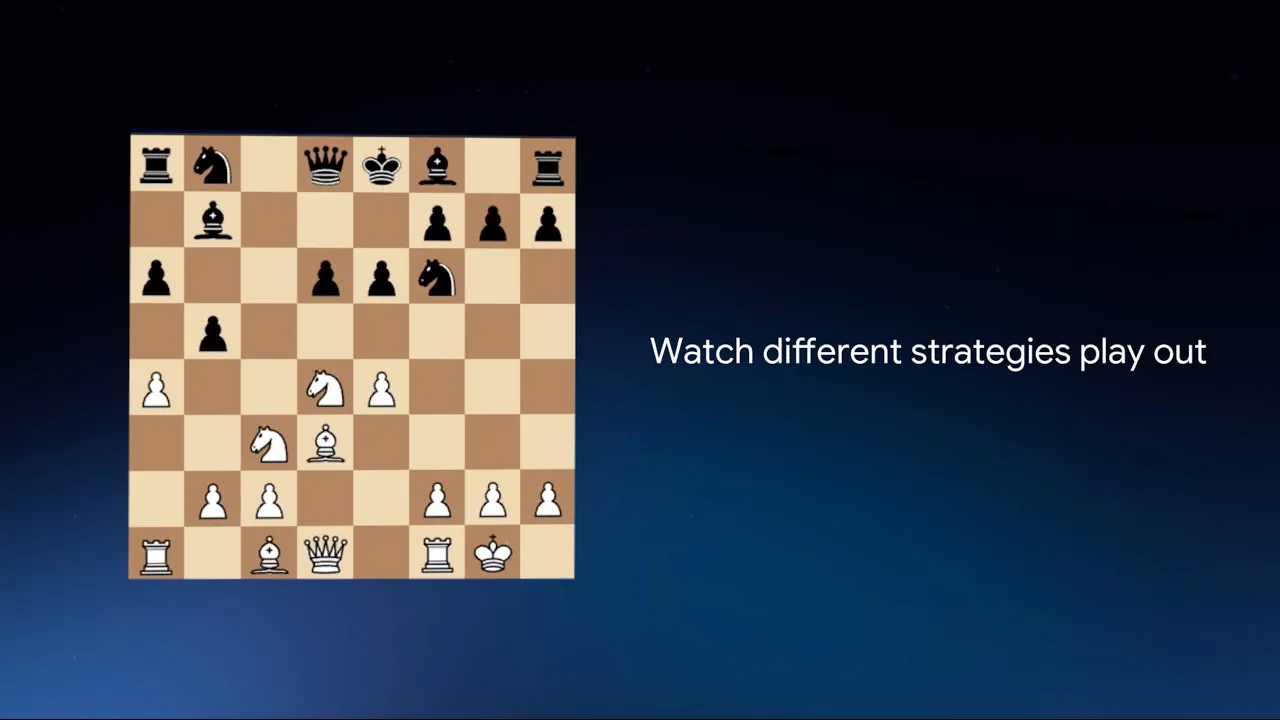

Perhaps most importantly, the platform’s focus on general-purpose language models in chess highlights a crucial question: How well can today’s LLMs adapt to highly structured, rule-based environments without bespoke training? The results so far underscore both the promise and current limitations of these systems. While Grok 4’s sweep was a headline moment, other matches revealed fascinating quirks, such as unorthodox openings or unexpected blunders, that shed light on how different architectures approach reasoning under pressure.

What Sets Kaggle Game Arena Apart?

Unique Advantages of Kaggle Game Arena for AI Agent Competition

- Dynamic, Real-Time Benchmarking: Kaggle Game Arena runs head-to-head matches between top AI models in live, evolving environments, moving beyond static test sets to assess real-time strategic reasoning.
- Evaluation of General-Purpose LLMs: The platform benchmarks general-purpose large language models (LLMs), such as Gemini 2.5 Pro, Claude Opus 4, and Grok 4, in complex games like chess, highlighting their adaptability and generalization skills.
- Transparency and Reproducibility: With open-source game environments and harnesses, Kaggle Game Arena ensures that results are transparent and reproducible, fostering trust and collaboration in the AI research community.
- Expert-Led Analysis and Commentary: The platform features commentary from renowned chess figures such as GM Hikaru Nakamura and five-time World Champion Magnus Carlsen, providing expert insight into AI strategies and decision-making.
- Public Accessibility and Engagement: All matches are livestreamed and replayable on Kaggle.com, allowing researchers, enthusiasts, and the public to follow AI performance as it happens and track its progression over time.
- Expansion to Diverse Strategic Games: Beyond chess, Kaggle Game Arena is set to include games like Go and poker, broadening the evaluation of AI models across multiple domains of strategic complexity.

For spectators and developers alike, this new breed of AI benchmarking platform offers unprecedented engagement. The combination of real-time play, expert analysis, and open-source tooling creates an ecosystem where learning is continuous and multi-directional. Developers can study top model decisions move-by-move, while fans enjoy high-stakes drama reminiscent of traditional esports tournaments, except here, every competitor is an AI agent.
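For move-by-move study, a replayed game usually ends up as a plain list of moves. The article does not describe the replay export format, so the following minimal Python sketch assumes standard PGN movetext and turns it into a move list and numbered move pairs. It is deliberately simple: it drops `{brace}` and `;` comments, move numbers, result markers, and `$`-style annotation glyphs, but it does not handle parenthesized variations.

```python
import re
from typing import List, Optional, Tuple

RESULT_TOKENS = {"1-0", "0-1", "1/2-1/2", "*"}


def movetext_to_moves(movetext: str) -> List[str]:
    """Extract SAN moves from simple PGN movetext (no variations)."""
    text = re.sub(r"\{[^}]*\}", " ", movetext)  # drop {brace comments}
    text = re.sub(r";[^\n]*", " ", text)          # drop ;rest-of-line comments
    moves: List[str] = []
    for token in text.split():
        token = re.sub(r"^\d+\.(\.\.)?", "", token)  # strip "12." or "12..." prefixes
        if not token or token in RESULT_TOKENS or token.startswith("$"):
            continue
        moves.append(token)
    return moves


def pair_moves(moves: List[str]) -> List[Tuple[int, str, Optional[str]]]:
    """Group moves as (move number, White's move, Black's move or None).

    Assumes the game starts at move 1 with White to play.
    """
    return [
        (i // 2 + 1, moves[i], moves[i + 1] if i + 1 < len(moves) else None)
        for i in range(0, len(moves), 2)
    ]


game = "1. e4 e5 2. Nf3 {developing} Nc6 3. Bb5 a6 *"
print(pair_moves(movetext_to_moves(game)))
# [(1, 'e4', 'e5'), (2, 'Nf3', 'Nc6'), (3, 'Bb5', 'a6')]
```

A full PGN parser would also need to handle headers, nested variations, and games starting from a custom position; a dedicated chess library is the safer choice for anything beyond quick inspection.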

The implications extend well beyond chess. As Kaggle Game Arena prepares to introduce additional games like Go and poker, it will further stress-test AI models’ abilities to handle uncertainty, bluffing, long-term planning, and even psychological nuance. Each new game environment acts as a proving ground for emergent capabilities and exposes gaps that spur further research.

Toward a New Era in AI Agent Competition

The debut of the Kaggle Game Arena signals a broader trend: AI gaming tournaments are evolving into sophisticated laboratories for evaluating intelligence itself. By prioritizing dynamic interaction over static benchmarks, platforms like this one help answer foundational questions about learning transfer, adaptability, and robustness.

As industry leaders continue to invest in these public competitions, and as more models enter the fray, the lessons learned could shape not just how we build better chess engines or game-playing bots but also how we approach AGI safety and alignment in real-world systems. The stakes are high: today’s arena victories could inform tomorrow’s breakthroughs in everything from autonomous vehicles to scientific discovery.

Kaggle Game Arena isn’t just setting new standards for AI agent competition; it’s making those standards visible and accessible to everyone with an internet connection.

If you’re passionate about staying ahead in this rapidly changing field or want to dive deeper into the mechanics behind these headline-grabbing tournaments, explore our detailed breakdown at Kaggle Game Arena: How Top AI Models Compete in Strategic Chess Tournaments.