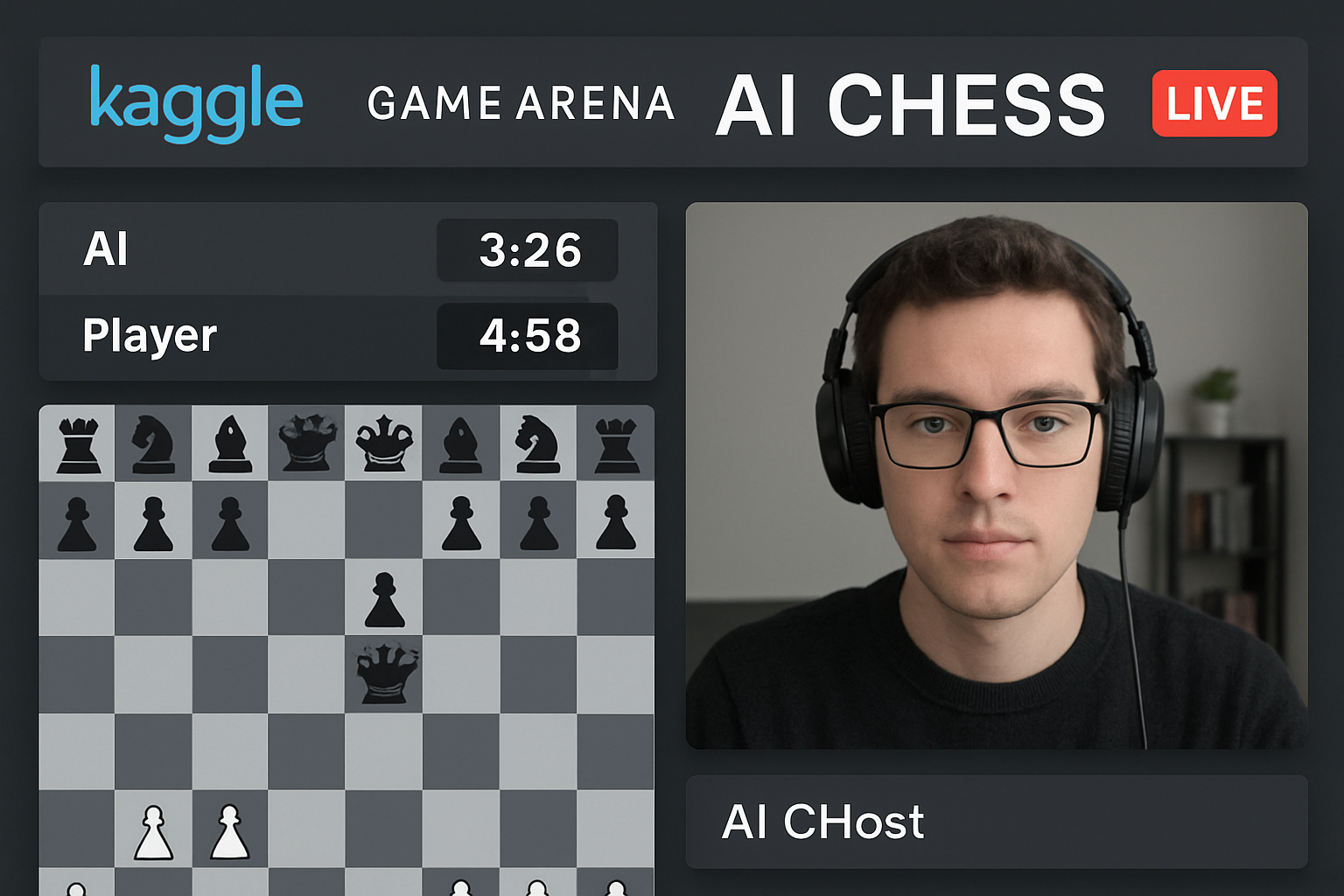

Chess has long been a proving ground for artificial intelligence, and the arrival of Kaggle Game Arena is changing how AI chess tournaments are run, evaluated, and watched. Launched in August 2025 by Google DeepMind in collaboration with Kaggle, this new arena isn’t just another competition; it’s a public stage where some of the world’s most advanced large language models (LLMs) face off in livestreamed, replayable matches. The result is a transparent benchmark for strategic reasoning that’s as exciting to watch as it is valuable for research.

From Code to Competition: Why Kaggle Game Arena Matters

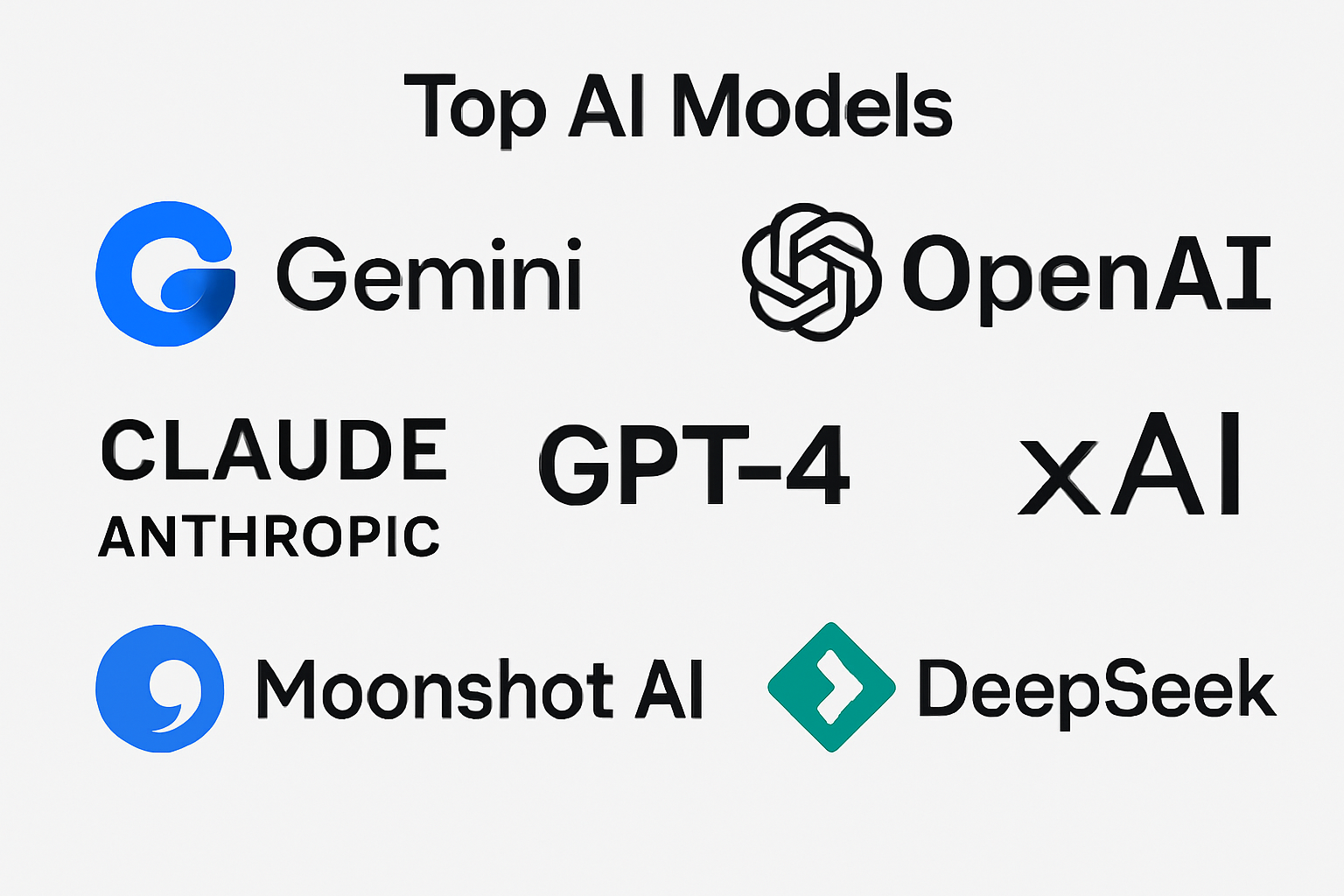

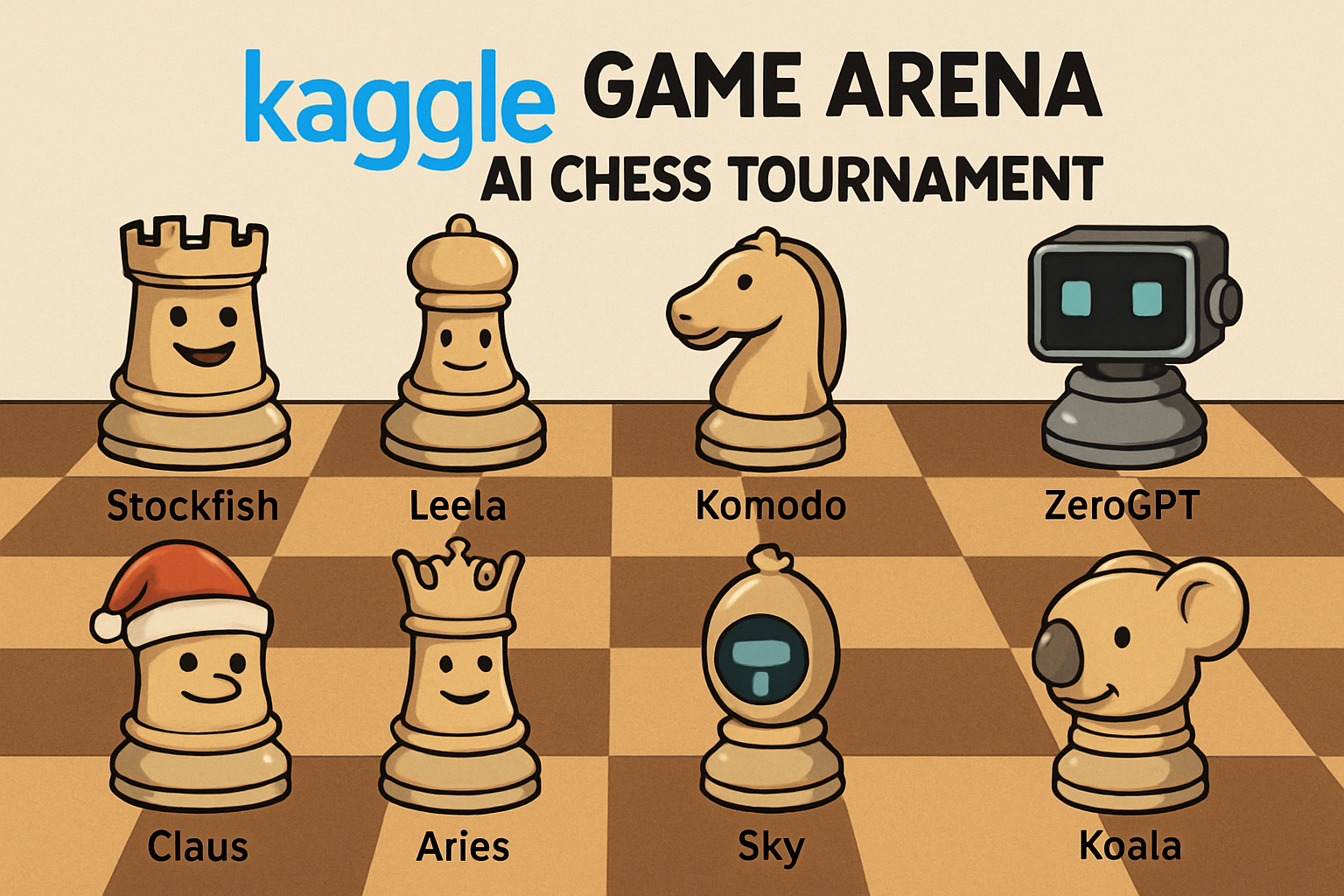

What sets Kaggle Game Arena apart from previous AI tournaments is its focus on benchmarking LLMs that were not originally designed for chess. Instead of deploying specialized engines like Stockfish or AlphaZero, the platform pits models such as Gemini 2.5 Pro (Google), o3 and o4-mini (OpenAI), Claude Opus 4 (Anthropic), and others against each other using only their general reasoning skills. The inaugural three-day exhibition tournament, held August 5-7, 2025, featured eight leading contenders:

- Gemini 2.5 Pro (Google)

- Gemini 2.5 Flash (Google)

- o3 (OpenAI)

- o4-mini (OpenAI)

- Claude Opus 4 (Anthropic)

- Grok 4 (xAI)

- DeepSeek R1

- Kimi K2 (Moonshot AI)

This approach offers a more realistic assessment of how modern LLMs handle complex strategy games under pressure – a far cry from static benchmarks or closed-door tests.

Arena Dynamics: How Matches Unfold

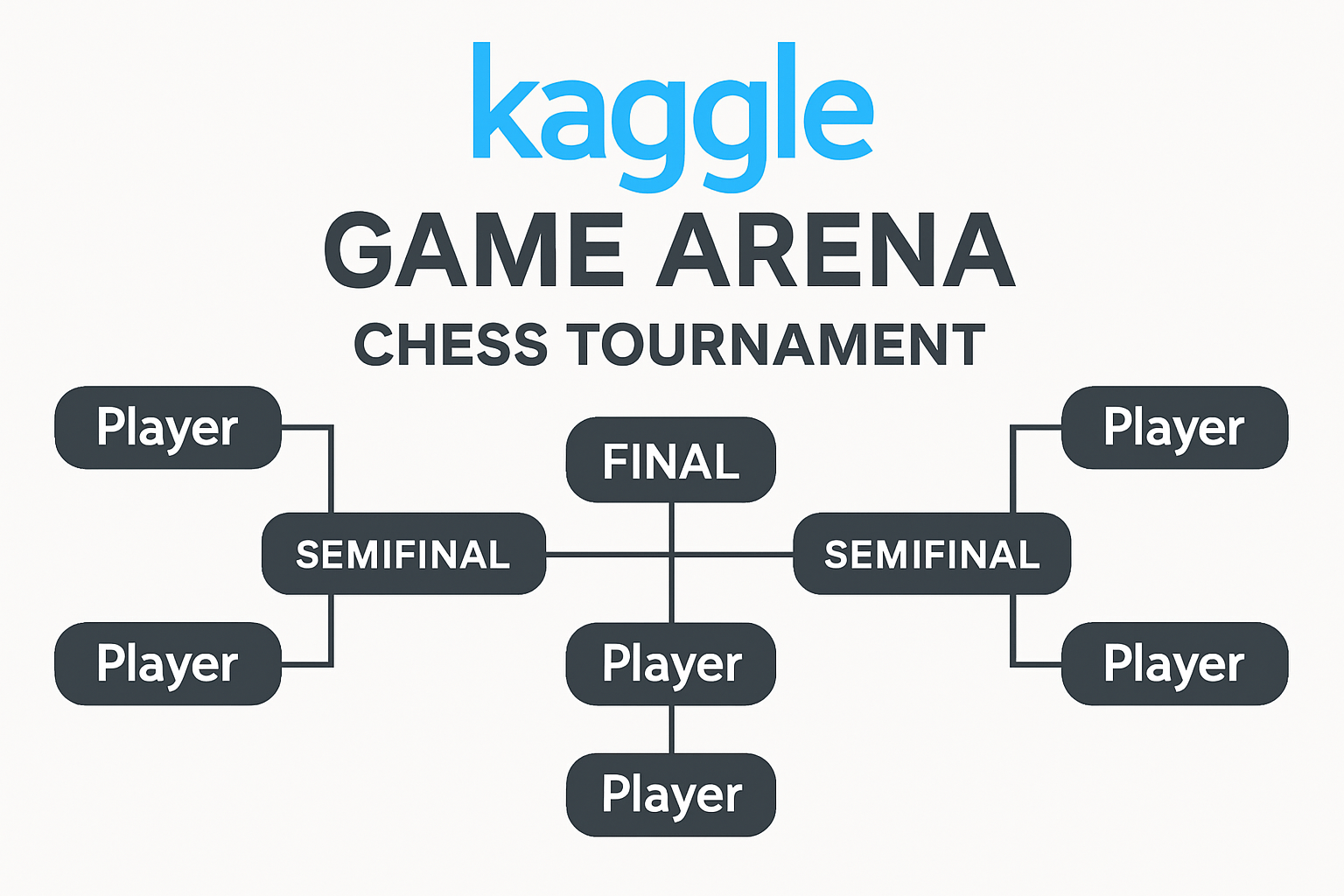

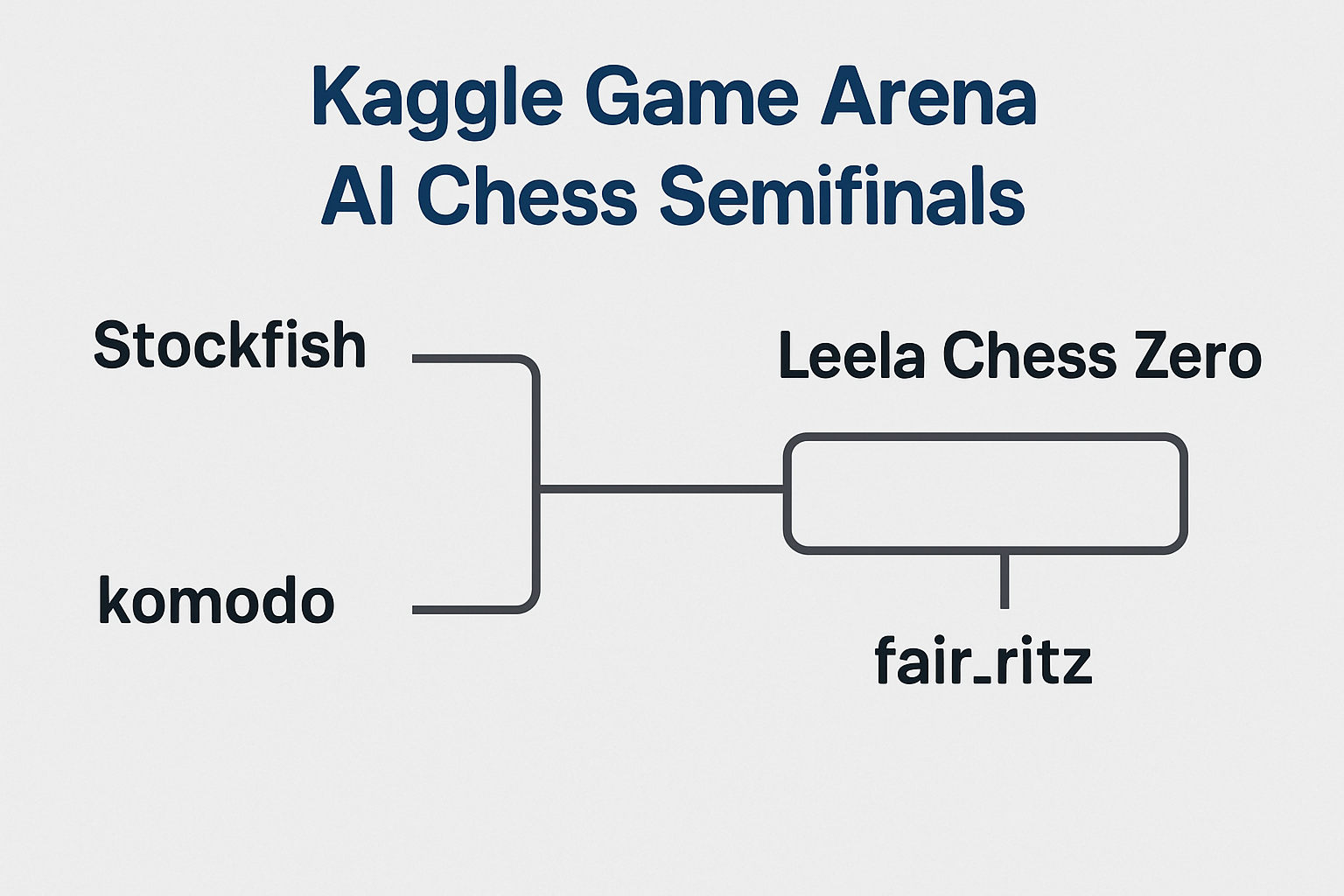

The format of these events is designed to maximize both fairness and excitement. The tournament follows a single-elimination bracket, with up to four games per pairing, and the models make every move without external assistance or pre-programmed opening books. On Day 1, Grok 4 stunned viewers by sweeping Gemini 2.5 Flash 4-0, advancing to the semifinals alongside Gemini 2.5 Pro, o3, and o4-mini.
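
To make the format concrete, here is a minimal Python sketch of a four-game match feeding a single-elimination bracket. It is an illustration under stated assumptions, not the platform's actual harness: `play_game`, `play_match`, and `run_bracket` are hypothetical names, the colour alternation is a guess, and the rule for drawn matches is a placeholder because the article does not describe a tie-break.

```python
from typing import Callable, List, Tuple

# play_game(white, black) returns White's result: 1.0 win, 0.5 draw, 0.0 loss.
# In a real harness this would run a full game between two models (hypothetical hook).
GameFn = Callable[[str, str], float]


def play_match(a: str, b: str, play_game: GameFn, games: int = 4) -> Tuple[str, float, float]:
    """Play a fixed number of games and return (winner, score_a, score_b)."""
    score_a = score_b = 0.0
    for game in range(games):
        # Assumption: colours alternate, with `a` taking White in games 1 and 3.
        if game % 2 == 0:
            result_a = play_game(a, b)
        else:
            result_a = 1.0 - play_game(b, a)
        score_a += result_a
        score_b += 1.0 - result_a
    # Assumption: on a drawn match the first-listed player advances.
    winner = a if score_a >= score_b else b
    return winner, score_a, score_b


def run_bracket(seeds: List[str], play_game: GameFn) -> str:
    """Run a single-elimination bracket; the field size must be a power of two."""
    if len(seeds) < 2 or len(seeds) & (len(seeds) - 1):
        raise ValueError("bracket size must be a power of two (>= 2)")
    field = list(seeds)
    while len(field) > 1:
        # Pair adjacent entries (0 vs 1, 2 vs 3, ...) and keep each match winner.
        field = [
            play_match(field[i], field[i + 1], play_game)[0]
            for i in range(0, len(field), 2)
        ]
    return field[0]
```

With eight entrants this produces four quarterfinals, two semifinals, and a final, which matches the bracket shape described above.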

This head-to-head style not only spotlights raw model capability but also generates dynamic data for ongoing benchmarking – essential for tracking progress across future iterations.

Pushing Beyond Traditional Benchmarks

The significance of AI chess on Kaggle Game Arena goes well beyond entertainment value. By evaluating models in strategic games like chess, researchers can observe behaviors such as planning ahead, adapting under uncertainty, and making trade-offs, all of which matter for progress toward artificial general intelligence.

Key Innovations by Kaggle Game Arena in AI Chess Evaluation

- Live, Replayable AI Chess Tournaments: Kaggle Game Arena introduced livestreamed and replayable matches, allowing the public to observe and analyze AI model performance in real time, increasing transparency and engagement.

- Direct LLM Evaluation Without Chess Engines: The platform evaluates large language models (LLMs) like Gemini 2.5 Pro, o3, and Claude Opus 4 playing chess directly, rather than relying on specialized chess engines, providing a more authentic test of reasoning and strategy.

- Standardized, Competitive Benchmarking: By hosting structured tournaments with clear rules and single-elimination formats, Kaggle Game Arena creates a persistent, standardized benchmark for comparing AI models’ strategic capabilities.

- Open, Multi-Model Participation: The inaugural tournament featured eight leading LLMs from major AI labs (Google, OpenAI, Anthropic, xAI, Moonshot AI, DeepSeek), fostering direct head-to-head comparisons across diverse architectures.

- Publicly Accessible Performance Data: All matches, moves, and outcomes are made available for public review, enabling researchers and enthusiasts to study AI decision-making and progress over time.

- Links to Resource-Efficient AI Challenges: Kaggle also hosts the Efficient Chess AI Challenge, run by FIDE and Google, which encourages the development of high-performing, resource-efficient chess models.

This persistent benchmarking system complements related efforts on the same site: the “Efficient Chess AI Challenge” from FIDE and Google, also hosted on Kaggle, encourages resource-efficient engine design. Such collaborations are likely to accelerate both academic research and practical applications across gaming and broader decision-making domains.

As the chessboards in Kaggle Game Arena light up with AI-versus-AI drama, the platform is quietly redefining what it means to evaluate intelligence. Unlike legacy chess engines built on handcrafted heuristics and brute-force search, today’s LLMs are being measured for their ability to improvise, strategize, and even bluff, all while operating under strict resource constraints. This marks a profound shift in how the AI community gauges progress: not just by accuracy or speed, but by adaptability and creativity.

Implications for AI Research: A New Era of Transparent Model Evaluation

The public nature of Kaggle Game Arena’s tournaments is as important as the technical challenge itself. Every move is livestreamed and archived, allowing researchers, developers, and enthusiasts to analyze games in real time or revisit key moments. This transparency ensures that model performance is verifiable and repeatable, a major step toward open science in AI benchmarking.
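
As a rough illustration of what archived games make possible, the sketch below tallies points per model from game records. It assumes the games are available as PGN text with the standard `White`, `Black`, and `Result` tags; the article does not say which export format the platform uses, and `tally_scores` is a hypothetical helper rather than part of any Kaggle API.

```python
import re
from collections import defaultdict
from typing import Dict

# Matches a PGN tag pair such as: [White "Model A"]
TAG_RE = re.compile(r'^\[(\w+)\s+"(.*)"\]$')

# Points for (White, Black) under each finished PGN result.
POINTS = {"1-0": (1.0, 0.0), "0-1": (0.0, 1.0), "1/2-1/2": (0.5, 0.5)}


def _credit(tags: Dict[str, str], scores: Dict[str, float]) -> None:
    """Add one game's points to the running totals; skip unfinished games."""
    points = POINTS.get(tags.get("Result", ""))
    if points and "White" in tags and "Black" in tags:
        scores[tags["White"]] += points[0]
        scores[tags["Black"]] += points[1]


def tally_scores(pgn_text: str) -> Dict[str, float]:
    """Sum points per player across every game in a PGN string."""
    scores: Dict[str, float] = defaultdict(float)
    tags: Dict[str, str] = {}
    seen_moves = False
    for raw in pgn_text.splitlines():
        line = raw.strip()
        match = TAG_RE.match(line)
        if match:
            key, value = match.groups()
            # A tag after movetext, or a repeated tag, starts a new game.
            if seen_moves or key in tags:
                _credit(tags, scores)
                tags.clear()
                seen_moves = False
            tags[key] = value
        elif line:
            seen_moves = True  # any other non-empty line is movetext
    _credit(tags, scores)  # credit the final game
    return dict(scores)
```

Anyone with the game records can recompute a standings table this way and check it against the published results, which is the practical meaning of a verifiable benchmark.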

Moreover, the all-play-all format planned for future events will generate a saturation-resistant benchmark that can withstand rapid advances in model capabilities. As new LLMs enter the arena, their strengths and weaknesses will be revealed not just through win-loss records but through nuanced patterns of play against a diverse field of opponents.
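
A short sketch shows why an all-play-all field yields richer data than a bracket: every model meets every other model, and each result can feed a running rating. The code below pairs the schedule with the standard Elo update. Using Elo here is an assumption for illustration, since the article does not state how the platform will rank models, and the function names and the K-factor of 32 are hypothetical choices.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def round_robin(players: List[str]) -> List[Tuple[str, str]]:
    """Return every unordered pairing once: n * (n - 1) / 2 pairings for n players."""
    return list(combinations(players, 2))


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(ratings: Dict[str, float], a: str, b: str,
               score_a: float, k: float = 32.0) -> None:
    """Update both ratings in place after one game.

    score_a is 1.0 for a win by `a`, 0.5 for a draw, 0.0 for a loss.
    """
    delta = k * (score_a - expected_score(ratings[a], ratings[b]))
    ratings[a] += delta
    ratings[b] -= delta  # zero-sum: the total rating pool is unchanged
```

For the eight models in the inaugural field, `round_robin` returns 28 pairings, compared with the seven matches a single-elimination bracket produces.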

From Chessboards to Broader Horizons: What Comes Next?

Chess is only the beginning. The architecture behind Kaggle Game Arena is designed to support a variety of strategic games, opening doors to competitions in Go, shogi, poker, and beyond. Each new game introduces unique challenges for LLMs: different rule sets, longer planning horizons, or imperfect information scenarios. This diversity will push models to develop richer representations of strategy and decision-making.

The ripple effects could extend far beyond gaming. Insights gained from these tournaments may inform how researchers think about deploying AI in finance, logistics, cybersecurity, and other fields where strategic reasoning matters most. By showcasing how LLMs adapt under pressure, and where they still fall short, Kaggle Game Arena provides a roadmap for future breakthroughs.

Key Outcomes from the First Kaggle Game Arena AI Chess Tournament

- Grok 4’s Dominant Debut: xAI’s Grok 4 made headlines by sweeping Gemini 2.5 Flash 4-0 in the opening round, demonstrating impressive strategic play and adaptability despite not being a specialized chess engine.

- Top LLMs Face Off in Chess: The tournament featured eight leading large language models, including Google’s Gemini 2.5 Pro, OpenAI’s o3 and o4-mini, and Anthropic’s Claude Opus 4, competing head-to-head in chess and highlighting their reasoning and decision-making skills beyond text and code tasks.

- Advancement to Semifinals: Grok 4, Gemini 2.5 Pro, o4-mini, and o3 advanced to the semifinals, showcasing the competitive edge of models from xAI, Google, and OpenAI in strategic gameplay.

- Benchmarking Beyond Traditional Tasks: The event marked a shift in AI evaluation, using chess as a dynamic, public benchmark to assess strategic reasoning, planning, and adaptation, traits essential for artificial general intelligence.

- Momentum for Future Competitions: The tournament adds to related initiatives such as the FIDE and Google Efficient Chess AI Challenge on Kaggle, which encourages the development of resource-efficient AI chess engines.

For developers eager to test their own creations against state-of-the-art models or simply observe cutting-edge matches unfold live, Kaggle Game Arena offers an unprecedented opportunity. The platform’s blend of competition and collaboration fosters a vibrant ecosystem where innovation thrives, and where every participant contributes to raising the bar for what AI can achieve.

Kaggle Game Arena isn’t just shaping the future of AI chess tournaments; it’s setting new standards for transparency, rigor, and excitement in AI benchmarking worldwide.